Okay, I’ll admit it: I am pretty biased when it comes to how people within organizations work together to make data projects successful. I have been involved in too many projects that failed to account for the importance of collaboration across departments and functions. They were focused on the data and only the data. The teams may have thought they knew what they wanted to achieve, but they didn’t always know the best way to get there, or what happens when you plan tactically without looking at the bigger picture.

Obviously, data is what drives business value. But without an understanding of how data flowing across disparate systems supports operations, building the right data pipelines as part of an overall data management and analytics initiative becomes much harder.

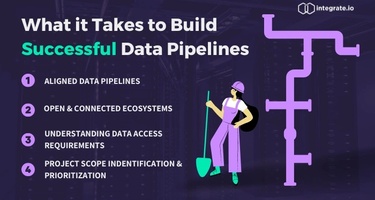

The goal of any technology project is successful implementation, low total cost of ownership (TCO), and high return on investment (ROI). In data integration, building the right data pipelines means understanding outcomes: how data is interconnected, what silos exist, and what visibility into information people across the organization need to succeed. This requires:

- Project scope identification and prioritization: Data teams understand the plumbing but sometimes need more insight into what different teams across the organization are doing with the data and how it helps them achieve their goals. Prioritizing projects, and designing data pipelines around the business outcomes they support, increases both the overall value of data integration and the perceived value of data within the organization.

- Aligning data pipelines with broader data management initiatives: An organization should create a data strategy that accounts for data governance, compliance, security, integration, and platform requirements. Looking only at individual data sources and the metrics required is not enough. Dependencies and complex business rules need to be identified, and that requires interaction across the organization.

- An open and connected data ecosystem: Openness matters because many systems do not natively interoperate, although this is changing as more platforms aim to give their customers flexibility. Data teams need to understand which systems are accessed, how data is used, and how business decisions are made using data assets. That understanding also reveals opportunities and gaps.

- Understanding data access requirements: Once value is defined, it becomes important to understand the target audience. Building data pipelines is one thing, but the outcomes those pipelines must deliver will differ from one audience to the next. Whether pipelines are built for operations or analytics, business users need flexibility. That flexibility belongs in the requirements-gathering process: data needs will keep shifting, so any integration project requires agility.

These four considerations provide the starting point. Put simply, a data project is never just about the data pipelines being built. Success requires an understanding of broader data access requirements and business outcomes. For longer-term success, a holistic view across the organization, one that takes into account both business and data needs, is essential.