Becoming a data-driven organization is a vital goal for businesses of all sizes and industries—but this is easier said than done. Too many companies fail to attain the fundamental principle of data observability: knowing the existence and status of all the enterprise data at their fingertips.

The five things you need to know about data observability in a data pipeline:

- Data observability is concerned with the overall visibility, status, and health of an organization’s data.

- Data observability is closely linked to other aspects of data governance, such as data quality and data reliability.

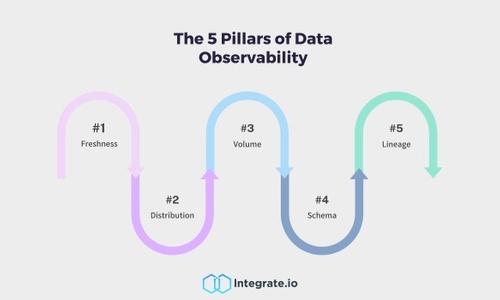

- The “five pillars” of data observability are freshness, distribution, volume, schema, and lineage.

- Data observability tools are used by organizations to monitor their enterprise data and detect and resolve any issues.

- Implementing data observability helps users get a complete picture of their information while reducing data downtime.

For the most effective analytics and decision-making, the information inside your data pipelines must be observable at all times. So what is data observability in a data pipeline? What are the five “pillars” of data observability, and why are they so important to achieve? We’ll answer these questions and more in this article.

What Is a Data Pipeline?

In the field of data integration, a data pipeline is an end-to-end series of multiple steps for aggregating information from one or more data sets and moving it to a destination. The term is meant to recall oil and gas pipelines in the energy industry, moving raw material from one place to another—just as raw data moves through a data pipeline.

Modern data pipelines are frequently used in the contexts of business intelligence and data analytics. The outputs of this process are analytical insights in the form of dashboards, reports, and visualizations to help enable smarter business decision-making.

In this use case, the raw data volumes are located in data sources of interest to the organization, such as databases, websites, files, and software platforms. These sources may be located inside a company’s IT ecosystem, or they may be external third-party resources. The destination of these data pipelines is typically a single centralized repository purpose-built for storing and analyzing large amounts of information, such as a data warehouse (like Snowflake) or data lake.

Along the way, transformations may be applied to raw data to cleanse it and improve its data quality and data integrity. Transformations may also be necessary to prepare the data for storage by fitting it into the target repository’s schema. If the process occurs exactly in this order—extraction, transformation, and loading—it is known as ETL.

Other variants of ETL may switch around these steps. For example, ELT (extraction, loading, transformation) moves data into the destination before applying any transformations. Data integration (sometimes called data ingestion) is a catch-all term for ETL, ELT, and other data pipeline formats.

The necessary components of a data pipeline are:

- Sources: One or more locations storing raw data, such as a database table or a CRM (customer relationship management) platform.

- Destination: The endpoint of the data pipeline. Information may be stored in a dedicated repository, such as a data warehouse, or fed directly into BI and analytics tools.

- Flow: The path of the raw data as it travels from source to destination, including any intermediate changes or transformations.

- Processing: The method(s) for extracting and transforming data. Popular types of data processing include batch processing, stream (real-time) processing, and distributed processing.

- Workflow: The order in which a data pipeline runs multiple processing jobs. This order is affected by factors such as urgency and dependencies. For example, the output of one job may be used as the input for another.

- Monitoring: Users must constantly monitor data in the pipeline to detect any data quality issues. Data monitoring is also important to identify and resolve any data downtime, bottlenecks, or outages in the pipeline.

As we’ll discuss below, this last notion—monitoring—is essential to the practice of data observability.

What Is Data Observability?

Data observability refers to a company’s ability to observe all of the information that exists within the organization. This includes the data’s existence and the data’s state of health.

As discussed above, the information useful to a business may be scattered across a variety of data systems and software. These include:

- Relational and non-relational (SQL and NoSQL) databases

- CRM (customer relationship management) software

- ERP (enterprise resource planning) solutions

- SaaS (software as a service) tools

- Ecommerce and marketing platforms

- Social media

To achieve data observability, companies must know the locations of the enterprise data that they rely on. Knowing these data sources is essential to perform data integration, moving information into a target repository for more effective analytics. This includes breaking down data silos, and repositories of valuable information that are not available to all the people and teams who could benefit.

Not only the presence but also the status and health of your data sources are crucial for data pipeline observability. If your information is corrupted, inaccessible, missing, or incorrect, the end results in the form of business intelligence and analytical insights will be inferior or misleading. The notion of data observability is closely linked to other components of data governance, such as data quality (ensuring information is accurate and up-to-date) and data reliability (making information available to the right people when it needs to be).

To achieve the goal of data observability, businesses often rely on data observability tools. These tools help companies with the tasks of data monitoring and management, saving countless hours of manual effort. Organizations that use data observability platforms should look for providers with the features and functionality below:

- Seamless integration: The data observability tool should be able to fit neatly into your data stack, including the databases, services, and platforms that you already use. This ensures that you won’t waste hours manually writing code or making changes to your existing setup.

- In-place monitoring: Data observability platforms should be able to monitor information at rest, without having to extract it from its storage location. This has a number of benefits, from performance and scalability to data security and compliance (by ensuring that you are not needlessly exposing sensitive information).

- User-friendliness: Businesses of all stripes can benefit from stronger data observability, which means that data observability tools must be accessible to technical and non-technical users. For example, a data observability platform might use artificial intelligence and machine learning to understand the baseline performance of your IT environment. Then, it can perform anomaly detection to identify when an error or issue arises.

The 5 Pillars of Data Observability

The notion of data observability is heavily inspired by the field of DevOps, which seeks to optimize the software development lifecycle by uniting development and IT operations. DevOps has defined the concept of the “three pillars of observability,” three sources of information that help DevOps professionals detect the root causes of IT problems and use troubleshooting to resolve them.

The three pillars of observability in DevOps are:

- Metrics: Organizations can use many different metrics and key performance indicators (KPIs) to understand the health of their systems. For example, if the DevOps team is monitoring a website, the key metrics to observe might include the number of concurrent users and the server’s response time. By establishing a baseline for these metrics, teams can more easily perform anomaly detection when events deviate from this baseline.

- Logs: Servers, software, and endpoints all maintain logs that contain data and metadata about the activities and operations occurring within the system. Logs may be stored in plaintext, binary, or structured (e.g., JSON or XML) format. Consulting these logs can help DevOps teams answer factual questions about a system’s usage and access, as well as the root cause of any failures or errors.

- Tracing: In recent years, distributed systems (multiple computers connected on a network and working on the same task) have surged in popularity. Traces are a version of logs that encode how an operation flowed between various nodes in a distributed system. Using traces makes it easier for DevOps teams to debug requests that touch multiple nodes in the system.

Just as DevOps seeks to improve software development, the new field of “DataOps” (data operations) seeks to improve business intelligence and analytics. In DataOps, data teams consisting of data scientists and data engineers work closely with non-technical business users to improve data quality and analytical insights.

Borrowing from DevOps, data engineering teams have formulated their own “pillars of observability,” increasing the number from three to five. These pillars are abstract, high-level ideas, letting businesses decide how to implement them in terms of concrete metrics. Below, we’ll discuss each of these five pillars of data observability in greater detail.

1. Freshness

Freshness is concerned with how “fresh” or up-to-date an organization’s data is. Having access to the latest, most accurate information is crucial for better decision-making. Questions such as “How recent is this data?” and “How frequently is this data updated?” are essential for this pillar.

2. Distribution

Distribution uses data profiling to examine whether an organization’s data is “as expected,” or falls within an expected level or range. For example, if a data set about user requests reveals that an unexpectedly high number of requests are timing out, then this is likely an indication that something is wrong with the underlying system. Strange or anomalous values may also signal that the data source is poor-quality, unvalidated, or untrustworthy.

3. Volume

Volume focuses on the sheer amount or quantity of data that an organization has at its fingertips. This figure should generally stay constant or increase unless data is deleted for being inaccurate, out-of-date, or irrelevant. Monitoring data volume also ensures that the business is effectively intaking data at the expected rate (for example, from real-time streaming sources such as Internet of Things devices).

4. Schema

Schema refers to the abstract design or structure of a database or table that formalizes how the information is laid out within this repository. Businesses must keep a watchful eye on their database schemas to verify that the data within remains accessible. Changes to a schema could break the database and may indicate accidental errors or even malicious attacks.

5. Lineage

Last but not least, the pillar of lineage offers a complete overview of the entire data “landscape” or ecosystem within an organization. Data lineage helps users understand the flow of information throughout the business, building a holistic picture to help with troubleshooting and resolving issues.

Why Is Data Observability Important in a Data Pipeline?

Data pipeline observability is a crucial practice for truly data-driven organizations. Below are just a few good reasons why businesses must implement data observability for their data pipelines:

- Getting the full picture: Companies that prioritize data observability are better able to see where their data is stored and break down any data silos. In turn, this lets teams and departments understand how they can use data most effectively in their BI and analytics workloads.

- Capturing data changes: As the business landscape evolves around you, the data you keep is expected to change with it. Data observability provides context to any changes made to your information, allowing you to adapt with the times.

- Diagnosing problems: Things don’t always go smoothly when making changes to your enterprise data. With data observability, data teams can detect problems early and maintain the high quality of their data sets, avoiding any issues with the final analytical results and insights.

- Reducing downtime: “Data downtime” refers to periods of time when your enterprise data is not at its optimal quality—whether information is missing, or the database has crashed. Through concepts such as the “five pillars,” data observability offers a means for businesses to check for data quality issues and limit downtime as much as possible.

How Integrate.io Can Help With Data Observability

To be at their most effective, data observability tools require companies to have a solid data integration strategy in place. Integrate.io is a powerful, feature-rich yet user-friendly ETL and data integration tool. The Integrate.io platform is based in the cloud and has been built from the ground up for the needs of Ecommerce businesses.

With Integrate.io’s no-code, drag-and-drop interface, it’s never been easier for users of any background or skill level to start defining lightning-fast data pipelines. To make things even simpler, Integrate.io provides more than 140 pre-built connectors and integrations for the most popular data sources and destinations, including databases, data warehouses, SaaS platforms, and marketing tools.

Integrate.io comes with a wide range of features and functionality that help companies extract the most value from their enterprise data. Users can easily detect exactly which data records and sources have changed since their most recent integration job, thanks to Integrate.io FlyData CDC (change data capture). What’s more, Integrate.io makes it easy to perform reverse ETL, sending information out of a centralized repository and into third-party data systems for easier access and analysis.