Discover how the Hadoop Distributed File System (HDFS) revolutionizes big data processing by efficiently storing and retrieving massive amounts of data across distributed computing clusters. This comprehensive guide offers an in-depth overview of HDFS, uncovering its inner workings and exploring its powerful applications for big data processing. Learn essential best practices to maximize the potential of HDFS, tackle common challenges, and gain valuable insights for a successful implementation.

Key Takeaways from the Guide

- HDFS is a key component of Hadoop, offering reliable storage through data replication.

- ETL tools are essential for processing and transforming data in HDFS.

- HDFS integrates with big data frameworks and supports batch processing.

- HDFS provides scalability, fault tolerance, and cost-effective storage.

- The NameNode manages file system metadata and coordinates read and write operations, while the data itself is stored on and served by DataNodes.

- Data replication provides fault tolerance and redundancy.

- HDFS is widely used for scalable storage and efficient data analysis in big data processing.

Introduction

To understand HDFS, it helps to first understand how it relates to Hadoop. Apache Hadoop is an open-source framework made up of several components for storing, processing, and analyzing data. HDFS is the file system component of that framework, responsible for storing and retrieving data. In simpler terms, HDFS is one module within the broader Apache Hadoop framework.

Hadoop was originally created by Doug Cutting and Mike Cafarella, with Cutting working at Yahoo at the time. Yahoo's involvement and contributions helped shape HDFS into an open-source, scalable distributed file system and a key component of the Apache Hadoop framework.

Role of HDFS in Big Data Processing

HDFS is a vital component of the Hadoop ecosystem and plays a critical role in big data processing. It provides reliable storage and management for very large datasets. Because files are split into blocks spread across many machines, the data can be processed in parallel, which speeds up data access and analysis.

For big data processing, HDFS excels at providing fault-tolerant storage for large datasets, which it achieves through data replication. It can store and manage large volumes of structured and unstructured data, including as the storage layer of a data warehouse environment. It also integrates with leading big data processing frameworks such as Apache Spark, Hive, Pig, and Flink, enabling scalable and efficient data processing. HDFS runs on Unix-like operating systems, particularly Linux, which makes it a natural choice for organizations that run their big data workloads in Linux-based environments.

HDFS empowers organizations to handle massive data volumes and perform complex data analytics. Data scientists, analysts, and businesses can leverage it to extract valuable insights, make data-driven decisions, and uncover hidden patterns within their extensive datasets.

Importance of ETL (Extract, Transform, Load) tools for Big Data Processing with HDFS

ETL tools play several important roles in big data processing with HDFS:

- They streamline the process of extracting data from various sources, transforming it, and loading it into HDFS.

- They enable data cleaning and enrichment and help ensure data consistency and quality.

- They provide workflow orchestration capabilities, managing complex data pipelines efficiently.

- They offer data governance features such as compliance support, security, and data lineage tracking.

- By combining ETL tools with HDFS, organizations can efficiently process and analyze large datasets.

- Together, ETL tools and HDFS support informed decision-making through insightful data analysis.

What is Hadoop Distributed File System (HDFS)?

Hadoop Distributed File System (HDFS) is a distributed storage system designed for managing large datasets across multiple machines in a Hadoop cluster. It is optimized for high-throughput access to large files rather than low-latency access to individual records, which makes it well suited to handling large datasets in the Apache Hadoop ecosystem.

HDFS offers a cost-effective storage solution by using standard hardware and distributed computing. It allows organizations to expand their storage capacity without incurring high expenses, making it an affordable choice for managing large amounts of data.

HDFS can also run in the cloud, where managed services offer flexible pricing options that let organizations align storage costs with their specific requirements. For example, Amazon Web Services (AWS) offers HDFS as part of Amazon EMR, allowing users to store and process data with HDFS on the AWS cloud infrastructure.

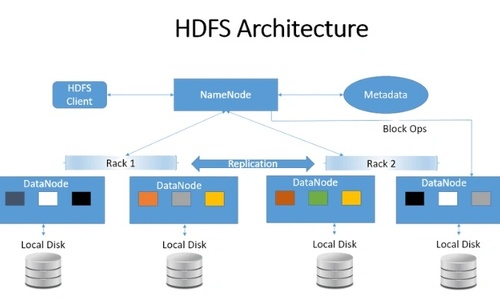

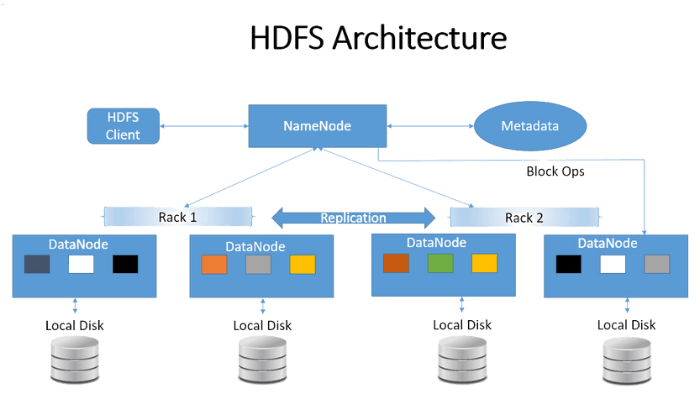

HDFS Architecture

HDFS is written primarily in Java, and Java provides the foundation for its core components, such as the NameNode, the DataNode, and various client interfaces. Python can also be used with HDFS through libraries and frameworks such as PySpark, the Python API for Apache Spark, which can read and write data stored in HDFS. This lets users perform data processing, analysis, and machine learning tasks on HDFS data using Python.

HDFS follows a master-slave architecture consisting of several key elements. Let's explore these elements:

- NameNode: The NameNode serves as the central metadata management node in HDFS. It stores crucial information about the file system's directory structure, file names, and attributes. The NameNode tracks the location of data blocks across DataNodes and orchestrates file operations, including reading, writing, and replication.

The NameNode persists the namespace metadata in two types of files:

  - FsImage: The FsImage file is a complete snapshot of the file system namespace at a point in time, capturing the hierarchical layout of directories and files in HDFS along with their attributes.

  - EditLog: EditLog files record every change made to the file system metadata since the last FsImage checkpoint, such as files being created, renamed, or deleted.

In a high-availability (HA) deployment, Apache ZooKeeper coordinates automatic failover between the active NameNode and a standby NameNode, so that the cluster can keep serving requests if the active NameNode fails. ZooKeeper does not store the file system metadata itself; that remains the NameNode's responsibility.

- DataNode: DataNodes store the actual data blocks of files and carry out read and write operations as directed by the NameNode. They provide fault tolerance by holding replicas of each block. Each DataNode sends a heartbeat message to the NameNode every 3 seconds by default to indicate that it is operational. If a DataNode stops sending heartbeats, the NameNode treats it as unavailable and schedules new copies of its blocks on other DataNodes to restore redundancy and fault tolerance.

- File Blocks in HDFS: HDFS divides files into blocks for efficient storage and retrieval. By default, each block is 128 MB, although the block size can be changed through the dfs.blocksize setting. For example, a 550 MB file is divided into 5 blocks: four blocks of 128 MB each (512 MB in total) and a fifth block holding the remaining 38 MB.

File Blocks

Choosing an appropriate block size helps optimize data processing: larger blocks mean fewer blocks for the NameNode to track, while smaller blocks produce more blocks that can be processed in parallel.

- Secondary NameNode: The Secondary NameNode is a helper node that periodically creates checkpoints of the namespace by merging the EditLog into the FsImage. This keeps the EditLog from growing without limit and makes NameNode restarts and recovery faster. Despite its name, it is not a standby NameNode and cannot take over if the NameNode fails.

- Metadata management: At startup, the NameNode loads the namespace from the FsImage into memory, applies the changes recorded in the EditLog, and creates a new checkpoint. Because the NameNode holds all metadata in RAM, its memory limits how many files and blocks the cluster can store.
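To make the block arithmetic and the FsImage/EditLog cycle above concrete, here is a minimal Python sketch. `ToyNameNode` and `split_into_blocks` are hypothetical names, not part of any Hadoop API; the sketch keeps only a file-to-block-sizes mapping (no block locations, permissions, or replicas) and models a checkpoint as "replay the EditLog onto the last FsImage, then start a new, empty log".

```python
from __future__ import annotations

from dataclasses import dataclass, field

MB = 1024 * 1024
DEFAULT_BLOCK_SIZE = 128 * MB  # HDFS default; configurable in a real cluster


def split_into_blocks(file_size: int, block_size: int = DEFAULT_BLOCK_SIZE) -> list[int]:
    """Return the size of each block a file of `file_size` bytes occupies.

    All blocks are full except possibly the last, which holds only the
    remaining bytes.
    """
    full_blocks, remainder = divmod(file_size, block_size)
    sizes = [block_size] * full_blocks
    if remainder:
        sizes.append(remainder)
    return sizes


@dataclass
class ToyNameNode:
    """Hypothetical, heavily simplified model of NameNode metadata."""

    fsimage: dict[str, list[int]] = field(default_factory=dict)  # last checkpoint
    edit_log: list[tuple[str, str, list[int]]] = field(default_factory=list)

    def create(self, path: str, file_size: int) -> None:
        # Changes are appended to the edit log; the FsImage is not rewritten.
        self.edit_log.append(("create", path, split_into_blocks(file_size)))

    def delete(self, path: str) -> None:
        self.edit_log.append(("delete", path, []))

    def namespace(self) -> dict[str, list[int]]:
        # Current state = last checkpoint + replay of every logged change.
        state = dict(self.fsimage)
        for op, path, blocks in self.edit_log:
            if op == "create":
                state[path] = blocks
            else:
                state.pop(path, None)
        return state

    def checkpoint(self) -> None:
        # Merge the log into a new FsImage and start an empty log.
        self.fsimage = self.namespace()
        self.edit_log.clear()


if __name__ == "__main__":
    nn = ToyNameNode()
    nn.create("/data/events.log", 550 * MB)
    print([size // MB for size in nn.namespace()["/data/events.log"]])  # [128, 128, 128, 128, 38]
    nn.checkpoint()
    print(len(nn.edit_log))  # 0
```

Every file and block in this mapping corresponds to metadata the real NameNode keeps in memory, which is why many small files put more pressure on the NameNode than a few large ones holding the same amount of data.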

Comparison to traditional file systems

When compared to traditional file systems, HDFS offers several advantages for big data processing:

- Scalability: Offers scalability by distributing data across multiple machines in a cluster.

- Fault Tolerance: Fault tolerance is achieved through data replication.

- Data Processing: Optimized for big data processing, supporting batch processing frameworks like MapReduce for parallel processing and efficient resource utilization.

- Cost-Effectiveness: A cost-effective solution as it runs on commodity hardware and leverages the distributed nature of the cluster for high throughput and performance.

- Data Science: Enables data science workflows by providing efficient storage and processing of large datasets, allowing data scientists to perform data preprocessing, feature extraction, and training of machine learning models on distributed computing clusters.

How does HDFS work?

HDFS enables scalable storage, retrieval, and processing of data through its distributed and fault-tolerant design. It optimizes read and write operations, ensures data redundancy, and incorporates algorithms for efficient data management. It boosts big data analytics by distributing data across clusters. Key aspects of HDFS functionality include:

HDFS Read and Write Operations

HDFS enables seamless and scalable read/write operations through distributed DataNodes and metadata management.

Write Operation

When writing a file to HDFS, the client first contacts the NameNode, which returns the DataNodes that should hold each block. The client then streams the data directly to those DataNodes through a pipeline. With the default replication factor of 3 and the default placement policy, block X might be written to DataNode A, which forwards it to DataNode B on a different rack, which in turn forwards it to DataNode C on the same rack as DataNode B. Acknowledgments travel back along the pipeline to the client, and the DataNodes report the stored blocks to the NameNode, ensuring data reliability and fault tolerance.
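The placement and pipelining described above can be sketched in a few lines of Python. This is a simplified model of HDFS's default rack-aware policy for a replication factor of 3: first replica on the writer's node when the writer is a DataNode, second on a node in a different rack, third on a different node in the second replica's rack. It picks candidates in sorted order where the real NameNode chooses randomly, and `place_replicas`, `write_pipeline`, and the node and rack names are hypothetical.

```python
from __future__ import annotations


def place_replicas(topology: dict[str, str], writer: str | None = None) -> list[str]:
    """Choose three DataNodes for a new block (hypothetical, simplified policy).

    `topology` maps each DataNode name to its rack. Sorted order stands in
    for the random choices a real NameNode makes.
    """
    nodes = sorted(topology)
    # Replica 1: the writer's own node if it is a DataNode, else any node.
    first = writer if writer in topology else nodes[0]
    # Replica 2: a node on a different rack from replica 1.
    second = next((n for n in nodes if topology[n] != topology[first]), None)
    if second is None:
        raise ValueError("this sketch needs DataNodes on at least two racks")
    # Replica 3: a different node on the same rack as replica 2.
    third = next((n for n in nodes if topology[n] == topology[second] and n != second), None)
    if third is None:
        raise ValueError("this sketch needs two DataNodes on the remote rack")
    return [first, second, third]


def write_pipeline(block_id: int, data: bytes, pipeline: list[str],
                   storage: dict[str, dict[int, bytes]]) -> list[str]:
    """Store the block on each node in pipeline order (each node forwards to
    the next) and return the order in which nodes send acknowledgments."""
    for node in pipeline:
        storage.setdefault(node, {})[block_id] = data
    # The last node acks first; each ack is passed upstream toward the client.
    return list(reversed(pipeline))


if __name__ == "__main__":
    racks = {"dn-a": "/rack1", "dn-b": "/rack1", "dn-c": "/rack2", "dn-d": "/rack2"}
    targets = place_replicas(racks, writer="dn-b")
    print(targets)  # ['dn-b', 'dn-c', 'dn-d']
    store: dict[str, dict[int, bytes]] = {}
    print(write_pipeline(1, b"block X", targets, store))  # ['dn-d', 'dn-c', 'dn-b']
```

Putting two replicas on one remote rack limits cross-rack traffic during the write, while still keeping a copy available if an entire rack goes down.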

Read Operation

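To read a file from HDFS, the client asks the NameNode for the file's metadata, including the locations of each block's replicas. The client then reads each block directly from a nearby DataNode that holds a replica.

The data flows directly from the DataNodes to the client without passing through the NameNode, and because blocks are spread across many DataNodes, many clients or tasks can read different blocks at the same time.

The client reads the blocks in sequence, so the application sees the file as one continuous stream of data.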
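The read path can be modeled the same way. In this hypothetical sketch, `block_locations` stands in for the metadata the NameNode returns (block IDs with their replica locations), and a three-level distance (same node, same rack, other rack) approximates the network-topology distance HDFS uses to pick the closest replica. `read_file` and `replica_distance` are illustrative names, not Hadoop functions.

```python
from __future__ import annotations


def replica_distance(node: str, client_node: str, client_rack: str,
                     topology: dict[str, str]) -> int:
    """Simplified network distance: 0 same node, 1 same rack, 2 other rack."""
    if node == client_node:
        return 0
    return 1 if topology[node] == client_rack else 2


def read_file(block_locations: list[tuple[int, list[str]]],
              storage: dict[str, dict[int, bytes]],
              topology: dict[str, str],
              client_node: str, client_rack: str) -> tuple[bytes, list[str]]:
    """Read blocks in file order, each from its closest replica.

    Returns the reassembled file contents and the DataNode used per block.
    """
    chunks: list[bytes] = []
    sources: list[str] = []
    for block_id, replicas in block_locations:
        # Ties are broken by node name so the result is deterministic.
        best = min(replicas, key=lambda n: (replica_distance(n, client_node, client_rack, topology), n))
        chunks.append(storage[best][block_id])
        sources.append(best)
    return b"".join(chunks), sources


if __name__ == "__main__":
    topology = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2"}
    storage = {"dn1": {1: b"hel"}, "dn2": {2: b"lo "}, "dn3": {1: b"hel", 2: b"lo ", 3: b"hdfs"}}
    locations = [(1, ["dn3", "dn1"]), (2, ["dn3", "dn2"]), (3, ["dn3"])]
    data, used = read_file(locations, storage, topology, client_node="dn1", client_rack="/rack1")
    print(data, used)  # b'hello hdfs' ['dn1', 'dn2', 'dn3']
```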

Note: HBase is part of the Hadoop ecosystem and provides random, real-time read/write access to data stored in HDFS. Data can be stored in HDFS either directly or through HBase, and consumers that need random access to that data read it through HBase, which sits on top of HDFS.

Data Replication and Fault Tolerance

HDFS ensures data reliability through replication. Data blocks are replicated across multiple DataNodes, enhancing fault tolerance and availability.

HDFS Replication Management: Ensuring Fault-Tolerance and Data Redundancy

Data replication ensures fault tolerance and accessibility. For example, if a block has a replication factor of 3, three copies are stored on separate DataNodes. If one DataNode fails, data can still be accessed from the remaining two replicas. This redundancy enhances data protection, availability, and reliability. By implementing this replication mechanism, HDFS ensures continuous access to data, strengthens data integrity, and improves fault tolerance within the Hadoop ecosystem. It enables organizations to handle large-scale data storage and processing.
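A small simulation shows why a replication factor of 3 tolerates the failure of any single DataNode. The block map and node names below are hypothetical, and `plan_rereplication` only mimics the idea of copying each under-replicated block from a surviving replica to a node that lacks it; the real NameNode also applies its rack-aware placement rules when choosing targets.

```python
from __future__ import annotations


def live_replicas(block_map: dict[str, set[str]], failed: set[str]) -> dict[str, set[str]]:
    """Replicas of each block that remain on non-failed DataNodes."""
    return {block: nodes - failed for block, nodes in block_map.items()}


def plan_rereplication(block_map: dict[str, set[str]], failed: set[str],
                       all_nodes: set[str], replication: int = 3) -> dict[str, tuple[str, list[str]]]:
    """For each under-replicated block, pick a source replica and new targets."""
    plan: dict[str, tuple[str, list[str]]] = {}
    for block, live in live_replicas(block_map, failed).items():
        missing = replication - len(live)
        if missing <= 0 or not live:  # fully replicated, or no copy left to copy from
            continue
        candidates = sorted(all_nodes - failed - live)
        plan[block] = (sorted(live)[0], candidates[:missing])
    return plan


if __name__ == "__main__":
    blocks = {
        "blk_1": {"dn1", "dn2", "dn3"},
        "blk_2": {"dn2", "dn3", "dn4"},
        "blk_3": {"dn1", "dn4", "dn5"},
    }
    nodes = {"dn1", "dn2", "dn3", "dn4", "dn5"}
    # Any single failure still leaves at least two copies of every block.
    for node in sorted(nodes):
        assert min(len(r) for r in live_replicas(blocks, {node}).values()) >= 2
    print(plan_rereplication(blocks, {"dn1"}, nodes))
    # {'blk_1': ('dn2', ['dn4']), 'blk_3': ('dn4', ['dn2'])}
```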

Enhancing Fault Tolerance in HDFS: Replication and Erasure Coding

HDFS is known for its robust fault tolerance capabilities, ensuring data availability and reliability. It achieves fault tolerance through replica creation and Erasure Coding. Replicas of user data are created on different machines, allowing data accessibility even if a machine fails.

Hadoop 3 introduced Erasure Coding, improving storage efficiency while maintaining fault tolerance. The combination of replication and Erasure Coding makes HDFS highly resilient and reliable for large-scale data storage and processing.
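The storage savings can be worked out with simple arithmetic. With a Reed-Solomon layout of 6 data and 3 parity blocks (RS(6,3), one of the erasure coding policies Hadoop 3 ships with), 6 blocks of data take 18 blocks of raw storage under 3x replication but only 9 under erasure coding. The sketch below reproduces that comparison; the function names are hypothetical, it assumes whole block groups, and it ignores the extra CPU and network cost of reconstructing lost data, which is the price erasure coding pays for its lower overhead.

```python
from __future__ import annotations

from fractions import Fraction


def replication_footprint(data_blocks: int, replication: int = 3) -> tuple[int, Fraction, int]:
    """(raw blocks stored, storage overhead, block losses tolerated)."""
    raw = data_blocks * replication
    return raw, Fraction(raw - data_blocks, data_blocks), replication - 1


def erasure_coding_footprint(data_blocks: int, data_units: int = 6,
                             parity_units: int = 3) -> tuple[int, Fraction, int]:
    """Same metrics for a Reed-Solomon (data_units, parity_units) layout.

    Assumes `data_blocks` fills whole block groups; any `parity_units`
    blocks of a group can be lost and rebuilt from the rest.
    """
    if data_blocks % data_units:
        raise ValueError("sketch assumes complete block groups")
    groups = data_blocks // data_units
    raw = groups * (data_units + parity_units)
    return raw, Fraction(raw - data_blocks, data_blocks), parity_units


if __name__ == "__main__":
    print(replication_footprint(6))     # (18, Fraction(2, 1), 2)
    print(erasure_coding_footprint(6))  # (9, Fraction(1, 2), 3)
```

In other words, RS(6,3) cuts the storage overhead from 200% to 50% while tolerating three lost blocks per group instead of two.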

NameNode and DataNode Functions in HDFS

HDFS uses a distributed architecture with two essential components: the NameNode and the DataNode. Each plays a distinct role in managing and storing data within the HDFS cluster.

NameNode

The NameNode in HDFS has three important roles:

- File System Namespace Management: The NameNode maintains the file system namespace and metadata of files and directories. It tracks attributes such as file permissions, timestamps, and block locations.

- Block Management: The NameNode manages the mapping of files to data blocks. It keeps track of which DataNodes store the data blocks and ensures data reliability through replication.

- Client Coordination: It acts as the intermediary between clients and DataNodes. It handles client requests for file operations, such as read, write, and delete. NameNode handles metadata and guides clients to the relevant DataNodes for accessing the required data.

DataNode

DataNodes are responsible for storing and managing data within HDFS. Their key functions include:

- Block Storage: DataNodes store data blocks of files on their local storage.

- Block Replication: They replicate data blocks to ensure fault tolerance.

- Heartbeat and Block Reports: DataNodes regularly send heartbeat signals and block reports to the NameNode.

Dividing responsibilities between the NameNode and DataNodes enables scalability, fault tolerance, and efficient data management in HDFS. The NameNode focuses on metadata coordination, while DataNodes handle data storage and replication operations.
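The heartbeat and block report mechanism also lends itself to a small model. `HeartbeatMonitor` is hypothetical: it records when each DataNode last sent a heartbeat and which blocks it listed in its latest block report, and it declares a node dead after an assumed `timeout`. A real NameNode derives its timeout from configuration and waits considerably longer than a few missed heartbeats before acting.

```python
from __future__ import annotations


class HeartbeatMonitor:
    """Hypothetical model of NameNode-side DataNode liveness tracking."""

    def __init__(self, timeout: float) -> None:
        self.timeout = timeout                   # seconds without a heartbeat
        self.last_seen: dict[str, float] = {}    # node -> last heartbeat time
        self.reported: dict[str, set[str]] = {}  # node -> blocks in last report

    def heartbeat(self, node: str, now: float) -> None:
        self.last_seen[node] = now

    def block_report(self, node: str, blocks: set[str]) -> None:
        # A block report replaces the previous list for that node.
        self.reported[node] = set(blocks)

    def dead_nodes(self, now: float) -> list[str]:
        return sorted(n for n, t in self.last_seen.items() if now - t > self.timeout)

    def blocks_to_rereplicate(self, now: float) -> set[str]:
        # Blocks hosted on dead nodes need new replicas elsewhere.
        lost: set[str] = set()
        for node in self.dead_nodes(now):
            lost |= self.reported.get(node, set())
        return lost


if __name__ == "__main__":
    monitor = HeartbeatMonitor(timeout=30.0)
    monitor.block_report("dn2", {"blk_1", "blk_7"})
    for t in (0.0, 3.0, 6.0):       # both nodes send heartbeats every 3 seconds
        monitor.heartbeat("dn1", t)
        monitor.heartbeat("dn2", t)
    monitor.heartbeat("dn1", 36.0)  # dn1 keeps reporting; dn2 is silent after t=6
    print(monitor.dead_nodes(now=37.0))                  # ['dn2']
    print(sorted(monitor.blocks_to_rereplicate(37.0)))   # ['blk_1', 'blk_7']
```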

HDFS for Big Data Processing

HDFS is essential for reliable storage and efficient big data processing. Here are its key aspects:

Understanding the MapReduce Programming Model

The MapReduce programming model is a key component of the Apache Hadoop framework and is designed to process large volumes of data in parallel across a cluster of computers. It consists of two main stages: the map stage and the reduce stage. Here's an overview of the MapReduce programming model:

MapReduce Components

- Map Stage: In this stage, the input data is divided into chunks and processed in parallel by multiple map tasks. Each map task takes a portion of the input data and applies a user-defined map function to generate key-value pairs as intermediate outputs.

- Shuffle and Sort: The intermediate key-value pairs generated by the map tasks are then sorted and grouped based on their keys. This step ensures that all values associated with the same key are brought together.

- Reduce Stage: In this stage, the sorted intermediate key-value pairs are processed by multiple reduce tasks. Each reduce task takes a group of key-value pairs with the same key and applies a user-defined reduce function to produce the final output.

- Output: The final output of the reduce tasks is collected and stored in the HDFS or another designated output location.

The MapReduce programming model provides a high-level abstraction for distributed data processing. It enables developers to write parallel and scalable data processing jobs easily. It allows for efficient utilization of the cluster's resources and handles various aspects such as data partitioning, distribution, and fault tolerance.
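The classic word-count job illustrates the three stages. The sketch below is a single-process Python simulation, not Hadoop's Java MapReduce API: each input split is handed to a map task on a thread pool, the shuffle step groups values by key, and the reduce step sums each group in sorted key order. `run_mapreduce`, `map_words`, and `sum_counts` are hypothetical names.

```python
from __future__ import annotations

from collections import defaultdict
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Iterable, Iterator


def map_words(line: str) -> Iterator[tuple[str, int]]:
    """Map function: emit (word, 1) for every word in a line."""
    for word in line.lower().split():
        yield word, 1


def sum_counts(key: str, values: list[int]) -> tuple[str, int]:
    """Reduce function: add up all counts for one word."""
    return key, sum(values)


def run_mapreduce(splits: list[list[str]],
                  mapper: Callable[[str], Iterable[tuple[str, int]]],
                  reducer: Callable[[str, list[int]], tuple[str, int]]) -> list[tuple[str, int]]:
    # Map stage: one map task per input split, run concurrently.
    def map_task(split: list[str]) -> list[tuple[str, int]]:
        return [pair for record in split for pair in mapper(record)]

    with ThreadPoolExecutor() as pool:
        map_outputs = list(pool.map(map_task, splits))

    # Shuffle and sort: bring together all values that share a key.
    groups: dict[str, list[int]] = defaultdict(list)
    for output in map_outputs:
        for key, value in output:
            groups[key].append(value)

    # Reduce stage: one reducer call per key, in sorted key order.
    return [reducer(key, groups[key]) for key in sorted(groups)]


if __name__ == "__main__":
    splits = [["the quick fox", "the lazy dog"], ["The fox"]]
    print(run_mapreduce(splits, map_words, sum_counts))
    # [('dog', 1), ('fox', 2), ('lazy', 1), ('quick', 1), ('the', 3)]
```

On a real cluster, each split typically corresponds to an HDFS block, and the framework runs map tasks on separate machines rather than threads.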

Best Practices for Utilizing HDFS in Big Data Processing

Explore HDFS best practices for big data processing: optimize storage, ensure fault tolerance, enhance performance, and leverage integration with other technologies.

Data Storage and Organization:

- Breaking down data into smaller, manageable chunks for efficient processing.

- Utilizing appropriate file formats, compression techniques, and data partitioning strategies.

- Optimizing block size and replication factor based on workload characteristics.

Fault Tolerance and Data Recovery:

- Ensuring high availability by configuring a NameNode high availability (HA) setup.

- Regularly backing up NameNode metadata and running a Secondary NameNode (or, in an HA setup, a Standby NameNode) to create regular checkpoints.

- Implementing strategies for data replication and ensuring data durability.

Maximizing Data Locality:

- Placing data and computation close together within the HDFS cluster.

- Optimizing data placement to reduce network transfers and data movement.

- Utilizing HDFS block placement policies to ensure data locality.

Security and Access Control:

- Implementing robust security measures, such as authentication and authorization.

- Utilizing HDFS encryption for data protection during storage and transmission.

- Defining access control policies to restrict unauthorized access to data.

Monitoring and Maintenance:

- Using robust monitoring tools such as Cloudera Manager to regularly assess the health and performance of your HDFS cluster.

- Performing routine maintenance tasks, including garbage collection and log rotation.

Integration with Big Data Ecosystem:

- Exploring the integration of HDFS with other Hadoop ecosystem components, such as MapReduce, YARN, Pig, and Hive.

- Leveraging complementary tools like Apache Spark and Apache Kafka for advanced data processing.

- Choosing data mining algorithms that are suited to distributed processing and can handle the scale and complexity of the data stored in HDFS.

- Understanding the role of HDFS in supporting data ingestion and data exchange with external systems.
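The data-locality practice can be illustrated with a greedy scheduler. This hypothetical sketch assigns one task per block, preferring a node that holds a replica (node-local), then a node on the same rack as a replica (rack-local), then any node with a free slot. Processing frameworks such as MapReduce and Spark follow a broadly similar order of preference, but their real schedulers are considerably more sophisticated; `assign_tasks` and the slot counts are assumptions for illustration.

```python
from __future__ import annotations


def assign_tasks(block_locations: dict[str, list[str]],
                 topology: dict[str, str],
                 free_slots: dict[str, int]) -> dict[str, tuple[str | None, str]]:
    """Map each block to (node, locality level) using a greedy preference order."""
    slots = dict(free_slots)
    plan: dict[str, tuple[str | None, str]] = {}
    for block in sorted(block_locations):
        replicas = block_locations[block]
        replica_racks = {topology[n] for n in replicas}
        available = [n for n in sorted(slots) if slots[n] > 0]
        preferences = [
            ("node-local", [n for n in available if n in replicas]),
            ("rack-local", [n for n in available if topology[n] in replica_racks]),
            ("off-rack", available),
        ]
        plan[block] = (None, "waiting")  # default when no slot is free anywhere
        for level, candidates in preferences:
            if candidates:
                node = candidates[0]
                slots[node] -= 1
                plan[block] = (node, level)
                break
    return plan


if __name__ == "__main__":
    topology = {"dn1": "/rack1", "dn2": "/rack1", "dn3": "/rack2"}
    blocks = {"blk_1": ["dn1"], "blk_2": ["dn1"], "blk_3": ["dn1"], "blk_4": ["dn3"]}
    for block, placement in assign_tasks(blocks, topology, {"dn1": 1, "dn2": 1, "dn3": 1}).items():
        print(block, placement)
    # blk_1 ('dn1', 'node-local')
    # blk_2 ('dn2', 'rack-local')
    # blk_3 ('dn3', 'off-rack')
    # blk_4 (None, 'waiting')
```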

Integration with ETL Tools for Data Processing and Transformation

When HDFS is integrated with Extract, Transform, Load (ETL) tools, it enables efficient data processing and transformation workflows. Here's how the integration between HDFS and ETL tools enhances data processing and transformation in big data environments:

- Data Storage and Accessibility: ETL tools can seamlessly access data stored in HDFS, eliminating the need for data movement or replication.

- Distributed Processing: ETL tools can leverage Hadoop's distributed computing capabilities to process data in parallel, improving performance and enabling faster data transformations.

- Scalability: Combining HDFS with ETL tools allows organizations to process and transform large datasets in a scalable manner.

- Data Extraction and Loading: Extract data from various sources, transform it, and load it back into HDFS or other target systems.

- Data Transformation and Enrichment: Enable complex transformations on data in HDFS for valuable insights and downstream analytics.

- Data Quality and Governance: ETL tools integrated with HDFS ensure data quality and governance compliance.

- Workflow Orchestration: Orchestrate Hadoop tasks in data workflows seamlessly.
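A minimal extract-transform-load flow might look like the following Python sketch. It is not tied to any ETL product: the CSV sample, field names, and function names are hypothetical, and a local temporary directory stands in for HDFS. The output uses the common `key=value` directory layout for date partitions, which engines such as Hive and Spark can use to skip irrelevant partitions when the same layout is written to an HDFS path.

```python
from __future__ import annotations

import csv
import io
import json
import tempfile
from collections import defaultdict
from pathlib import Path

RAW_CSV = """event_id,user,amount,date
1, Alice ,10.50,2024-01-01
2,bob,not_a_number,2024-01-01
3,carol,7.25,2024-01-02
"""


def extract(text: str) -> list[dict[str, str]]:
    """Extract: parse raw CSV into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: list[dict[str, str]]) -> list[dict]:
    """Transform: drop rows with invalid amounts, normalize types and names."""
    clean = []
    for row in rows:
        try:
            amount = float(row["amount"])
        except ValueError:
            continue  # a real pipeline might route bad rows to a quarantine area
        clean.append({
            "event_id": int(row["event_id"]),
            "user": row["user"].strip().lower(),
            "amount": amount,
            "date": row["date"],
        })
    return clean


def load(rows: list[dict], base_dir: Path) -> list[Path]:
    """Load: write JSON Lines files partitioned as base_dir/date=YYYY-MM-DD/."""
    partitions: dict[str, list[dict]] = defaultdict(list)
    for row in rows:
        partitions[row["date"]].append(row)
    written = []
    for date, part_rows in sorted(partitions.items()):
        out = base_dir / f"date={date}" / "part-00000.jsonl"
        out.parent.mkdir(parents=True, exist_ok=True)
        out.write_text("".join(json.dumps(r) + "\n" for r in part_rows))
        written.append(out)
    return written


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        for path in load(transform(extract(RAW_CSV)), Path(tmp)):
            print(path.relative_to(tmp), path.read_text().strip())
```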

Common Challenges with HDFS

HDFS is a powerful tool for big data processing and storage, but it also presents some challenges that organizations need to address. Here are three common challenges associated with HDFS:

- Scalability and Performance Issues: As the volume of data increases, ensuring scalability and optimal performance becomes crucial. Managing a large HDFS cluster with thousands of nodes and petabytes of data requires careful configuration, load balancing, and monitoring. Inefficient data distribution, network bottlenecks, and hardware limitations can impact overall performance.

- Security and Access Control: HDFS initially lacked robust security mechanisms, but recent versions have introduced features like encryption, authentication, and authorization. However, implementing and managing access controls, ensuring secure data transfers, and integrating with existing authentication frameworks can be challenging. Organizations need to carefully configure and monitor security features to protect sensitive data.

- Data Consistency and Reliability: HDFS provides fault tolerance by replicating data across multiple nodes. However, keeping replicas consistent during writes and reads can be challenging. HDFS simplifies this with a write-once, read-many model: a file has a single writer at a time and can be appended to but not modified in place. In distributed environments, issues like network partitions or node failures can still impact data reliability, so organizations need appropriate consistency checks and monitoring mechanisms to maintain data integrity.

Addressing these challenges requires a combination of technical expertise, proper planning, and continuous monitoring. Organizations should invest in skilled administrators, robust monitoring tools, and best practices for HDFS management. Regular performance tuning, security audits, and data consistency checks are necessary to overcome these challenges and ensure the smooth operation of HDFS for big data processing and storage.

Key Considerations for HDFS Implementation

When implementing HDFS (Hadoop Distributed File System), it's essential to keep in mind the following key considerations to ensure a successful deployment:

- Hardware and software requirements: Before setting up HDFS, carefully assess the hardware infrastructure, including storage capacity, network bandwidth, memory, and processing power. Additionally, choose the right Hadoop distribution and compatible software components to ensure seamless integration and optimal performance.

- Capacity planning and data management: Proper capacity planning is crucial to accommodate the expected data volume and growth. Evaluate the data ingestion rate, frequency of updates, and data retention period to determine the required storage capacity. Consider factors like data replication, partitioning, compression, and archiving to optimize storage efficiency and facilitate data retrieval.

- Backup and disaster recovery: Protecting data integrity and ensuring business continuity should be a priority. Establish a robust backup strategy by regularly backing up data to secondary storage or remote locations. Define backup frequency, data retention policies, and recovery procedures to safeguard against disasters and data loss.
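Capacity planning often starts with back-of-the-envelope arithmetic like the sketch below. Every input is an assumption to replace with your own measurements: the daily ingest volume, the retention period, an expected compression ratio, and a headroom fraction kept free for temporary data and growth. The replication factor of 3 is the HDFS default, and `required_raw_capacity_tb` is a hypothetical helper.

```python
def required_raw_capacity_tb(daily_ingest_gb: float, retention_days: int,
                             replication: int = 3, compression_ratio: float = 1.0,
                             headroom: float = 0.25) -> float:
    """Estimate raw cluster storage (decimal TB) needed to hold retained data.

    logical data = daily ingest x retention / compression ratio
    raw data     = logical data x replication factor
    raw capacity = raw data / (1 - headroom), keeping `headroom` of disks free
    """
    if not 0 <= headroom < 1:
        raise ValueError("headroom must be in [0, 1)")
    logical_gb = daily_ingest_gb * retention_days / compression_ratio
    raw_gb = logical_gb * replication
    return raw_gb / (1 - headroom) / 1000


if __name__ == "__main__":
    # Hypothetical workload: 500 GB/day kept for a year, compressing 2:1.
    print(required_raw_capacity_tb(500, 365, compression_ratio=2.0))  # 365.0
```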

By taking these considerations into account, you can ensure a smooth and successful implementation of HDFS. Remember to assess hardware and software requirements, plan for capacity and data management, and establish a reliable backup and disaster recovery strategy for a resilient and efficient HDFS environment.

Conclusion

In conclusion, HDFS plays a vital role in big data processing and storage, providing scalability, fault tolerance, and distributed computing capabilities. Its integration with ETL tools enhances data processing and transformation workflows, allowing organizations to unlock valuable insights from their data.

HDFS also provides APIs that let developers interact with it programmatically, including a Java API and the WebHDFS REST API. These APIs offer functions and methods for operations on files and directories, such as create, read, write, and delete. Developers can use them to integrate HDFS storage into their applications and take advantage of the distributed nature of Apache Hadoop for efficient, scalable processing.
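One of those APIs is WebHDFS, a REST interface in which each operation is an HTTP request to `/webhdfs/v1/<path>?op=<OPERATION>` on the NameNode's web port (9870 by default in Hadoop 3). The sketch below only builds the HTTP method and URL for a few operations, so it runs without a cluster. The host name and `webhdfs_request` are hypothetical; `user.name` applies only to clusters using simple authentication (Kerberos-secured clusters need SPNEGO instead), and a real CREATE request is redirected to a DataNode, which the client must follow to upload the data.

```python
from __future__ import annotations

from urllib.parse import quote, urlencode

# HTTP method used by each WebHDFS operation covered in this sketch.
WEBHDFS_METHODS = {
    "OPEN": "GET",
    "GETFILESTATUS": "GET",
    "LISTSTATUS": "GET",
    "MKDIRS": "PUT",
    "CREATE": "PUT",
    "DELETE": "DELETE",
}


def webhdfs_request(host: str, path: str, op: str, port: int = 9870,
                    user: str | None = None, **params: object) -> tuple[str, str]:
    """Return (HTTP method, URL) for a WebHDFS operation without sending it."""
    if op not in WEBHDFS_METHODS:
        raise ValueError(f"operation not covered by this sketch: {op}")
    if not path.startswith("/"):
        raise ValueError("HDFS paths must be absolute")
    query: dict[str, str] = {"op": op}
    if user:
        query["user.name"] = user
    for key, value in params.items():
        # Booleans are sent in lowercase, e.g. recursive=true.
        query[key] = str(value).lower() if isinstance(value, bool) else str(value)
    url = f"http://{host}:{port}/webhdfs/v1{quote(path)}?{urlencode(query)}"
    return WEBHDFS_METHODS[op], url


if __name__ == "__main__":
    print(webhdfs_request("namenode.example.com", "/user/alice/logs", "LISTSTATUS", user="alice"))
    print(webhdfs_request("namenode.example.com", "/tmp/old", "DELETE", recursive=True))
```

To actually send a request, the returned pair could be passed to the standard library as `urllib.request.Request(url, method=method)` and opened with `urllib.request.urlopen`.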

When implementing HDFS with ETL tools like Integrate.io, organizations can overcome scalability and performance challenges, ensure data consistency and reliability, and leverage the power of distributed computing. By addressing hardware and software requirements, performing capacity planning, and establishing backup and disaster recovery strategies, businesses can harness the full potential of HDFS for their data-driven projects.

As you embark on your HDFS and ETL journey, consider exploring the capabilities of Integrate.io's No-Code Data Pipeline Platform for Data Teams and Line of Business Users.

- Integrate.io seamlessly integrates with HDFS and other big data technologies.

- It provides a user-friendly platform for automating data pipelines and replicating data across different sources.

- The platform offers a drag-and-drop interface, eliminating the need for coding.

- It supports a wide range of applications and data sources.

- Integrate.io simplifies big data processing and helps businesses make better decisions.

- No need for additional hardware or staff investment.

Don't miss out on the possibilities of combining HDFS and Integrate.io for your data-driven projects. Explore the features and benefits of Integrate.io today with a 14-day trial and take your data processing and transformation to new heights. You can also schedule a demo with one of our experts to discuss your specific needs. Empower your organization with the tools and technologies needed to thrive in the era of big data and maximize the value of your data assets.