Data integration and migration can be overwhelming. It's easy to underestimate the complexity of moving data between different sources and destinations, and starting without thorough planning and the right ETL (Extract, Transform, Load) setup can put your business goals, deadlines, and budget at risk. As data volumes continue to grow, it's crucial to approach data management with the right tools and strategies.

In this article, we’ll look at some of the leading Microsoft SQL Server ETL tools, share tips to optimize the data integration and migration process, and discuss methods to reduce errors.

Here are 4 key methods we’ll discuss in this article to optimize data integration:

- Preparing data for migration (data cleaning, validation, mapping, and transformation)

- Incremental load, full-load, parallel processing

- Bulk loading, partitioning data, indexing strategies

- Enhancing data integration by transforming, scheduling, monitoring, and other methods

Introduction

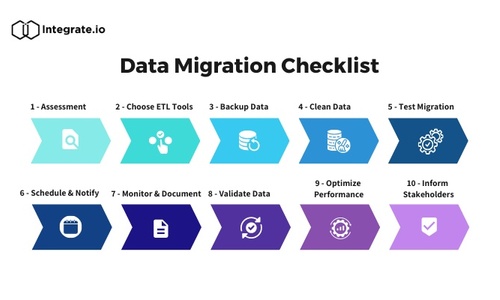

Choosing to work with ETL tools significantly improves the data migration and integration process. The process involves multiple steps, including data extraction from diverse sources and loading it into the desired destination. Automating these steps and transformations is crucial for proper and scheduled integration. This is where ETL (Extract, Transform, Load) tools play an essential role. They provide the necessary features to automate these processes and streamline the entire data integration workflow.

Many Microsoft SQL Server ETL tools are available, including Integrate.io, Talend, Azure Data Factory, Informatica, and Microsoft's own SSIS (SQL Server Integration Services). We’ll discuss these and others later in this article.

Microsoft ETL tools are an efficient solution for companies that need to integrate data from different sources. A well-executed process reduces errors - resulting in reliable insights and improved efficiency.

Here are some key benefits of utilizing ETL tools:

- Scalability: ETL tools enable your organization to handle large volumes of data with features like auto-scaling and parallel processing. These features allow businesses to adjust to the changing workload demands over time.

- Data accessibility: ETL tools extract data, transform it into a consistent format, and store it in a data warehouse or data lake. This ensures that data is available and can be accessed whenever needed, irrespective of where it is stored.

- Cost reduction: ETL tools reduce the need for manual intervention, which in turn reduces the risk of costly mistakes caused by manual work.

- Security and governance: ETL tools provide features that support data security and governance. They allow you to implement proper controls, comply with industry regulations, and maintain data integrity, confidentiality, and privacy.

Understanding ETL in Data Migration

ETL tools play a vital role in streamlining the data migration and integration process. Here’s a quick explanation of how they achieve this:

- Data Extraction: This phase involves retrieving data from a variety of sources such as databases, files, and APIs. The data can arrive in different formats (CSV, JSON, XML, etc.). ETL tools provide pre-built connectors that help you connect to these sources and handle files in varied formats.

- Data Transformation: Extracted data is rarely in a ready-to-use format. In the transformation phase, ETL tools convert it into a consistent and meaningful format: the data is cleaned, filtered, and validated to align it with your business needs.

- Data Loading: This final phase involves loading the transformed data into a target destination, such as a database, data warehouse, or data lake. ETL tools ease the loading process with features like batch processing, parallel loading, etc. Loading data efficiently ensures it is available for reporting, visualization, and decision-making processes.

- Workflow Management: Depending on the business and data requirements, the ETL processes need to be managed and scheduled for timely runs. ETL tools provide features with visual interfaces that help you design and schedule the data integration and migration workflows.

- Error-Handling and Monitoring: ETL tools help in monitoring the pipeline runs, and logging errors as they happen. Features like alerts, triggers, retrying failed tasks, etc. allow businesses to debug any transformation failures and minimize the possibility of any errors.
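The extract, transform, and load phases described above can be sketched in a few lines of Python. This is a minimal illustration, not how any particular ETL tool works internally: the CSV content, the `sales` table, and the function names are hypothetical, and SQLite (from the standard library) stands in for a SQL Server destination.

```python
import csv
import io
import sqlite3

# Hypothetical source data; in practice this would come from a file, API, or database.
RAW_CSV = """id,name,amount
1, Alice ,10.50
2,Bob,not_a_number
3,Carol,7
"""

def extract(text):
    """Extract: parse CSV text into a list of dict rows."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: trim names, convert types, and set aside rows that fail conversion."""
    clean, rejected = [], []
    for row in rows:
        try:
            clean.append((int(row["id"]), row["name"].strip(), float(row["amount"])))
        except ValueError:
            rejected.append(row)
    return clean, rejected

def load(conn, rows):
    """Load: write the transformed rows into the target table (SQLite as a stand-in)."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS sales (id INTEGER PRIMARY KEY, name TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO sales VALUES (?, ?, ?)", rows)
    conn.commit()

def run_pipeline(conn, text):
    """Run all three phases and report how many rows were loaded and rejected."""
    clean, rejected = transform(extract(text))
    load(conn, clean)
    return len(clean), len(rejected)
```

Calling `run_pipeline(sqlite3.connect(":memory:"), RAW_CSV)` loads the two valid rows and sets aside the one whose amount cannot be parsed. Workflow management and monitoring would wrap around a function like `run_pipeline`.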

Data Migration Approach: Traditional vs ETL-Based

There is a significant difference between the traditional data migration process and the ETL-based data migration approach - the latter being notably more efficient. Let’s discuss the differences in detail:

- Complexity

  - The traditional approach involves manually writing custom scripts to move data from a source system to the target system. This can be complex and time-consuming, especially when the scripts are tailored to a specific source system.

  - The ETL-based approach simplifies migration by providing pre-built connectors that ease the process of connecting to the source system. The visual interface makes extraction, transformation, and loading quicker and more efficient.

- Scalability

  - The traditional approach struggles with large volumes of data. With big data in particular, the manual process can create performance issues.

  - The ETL-based approach supports smooth migration even as data volume increases. ETL tools are built to handle large amounts of data with features like parallel processing and scalable resources.

- Data Integrity

  - The traditional approach lacks built-in data validation, which can lead to data discrepancies in the destination.

  - The ETL approach addresses this with data validation and error-handling capabilities. Error logs and data quality checks help keep the migrated data accurate.

- Automation

  - The traditional approach requires significant manual involvement throughout the migration process. This makes it difficult to automate and increases the chance of human error.

  - The ETL approach eases automation and monitoring. ETL tools provide automation features that reduce the need for manual intervention.

Benefits of ETL Tools

Leveraging ETL tools for data migration and integration tasks helps companies optimize their data flows and improve efficiency. Let’s explore some of the key benefits:

- Efficiency: ETL tools automate the data extraction, transformation, and loading tasks, which streamlines the migration process.

- Data Integrity: There are several steps involved in an ETL workflow - data cleaning, validating, and transformations. These steps ensure data accuracy and consistency through the data migration process. The tools help in identifying inconsistencies and discrepancies, leading to improved data quality and integrity.

- Seamless Integration: ETL tools come with hundreds of in-built connectors that allow companies to link the source data with the target database. This allows companies to integrate data from heterogeneous sources and handle different data formats.

- Flexibility and scalability: ETL workflows can be customized to meet specific business/data requirements, while also handling large volumes of data. The data transformations let companies integrate diverse data structures and formats.

- Cost-effectiveness: ETL tools reduce manual effort and the errors that come with it, which lowers the risk of costly mistakes.

- Customer support: When following the traditional approach, if you run into any problems, debugging and resolving the issue can be a cumbersome process. ETL tools allow you to connect with trained specialists who can help with quicker resolution.

Top 7 Microsoft SQL ETL Tools

Microsoft ETL tools are software applications developed to extract data from different sources, transform it to meet business standards, and load it into the destination database. There are many popular ETL tools; let’s take a closer look at the leading ones:

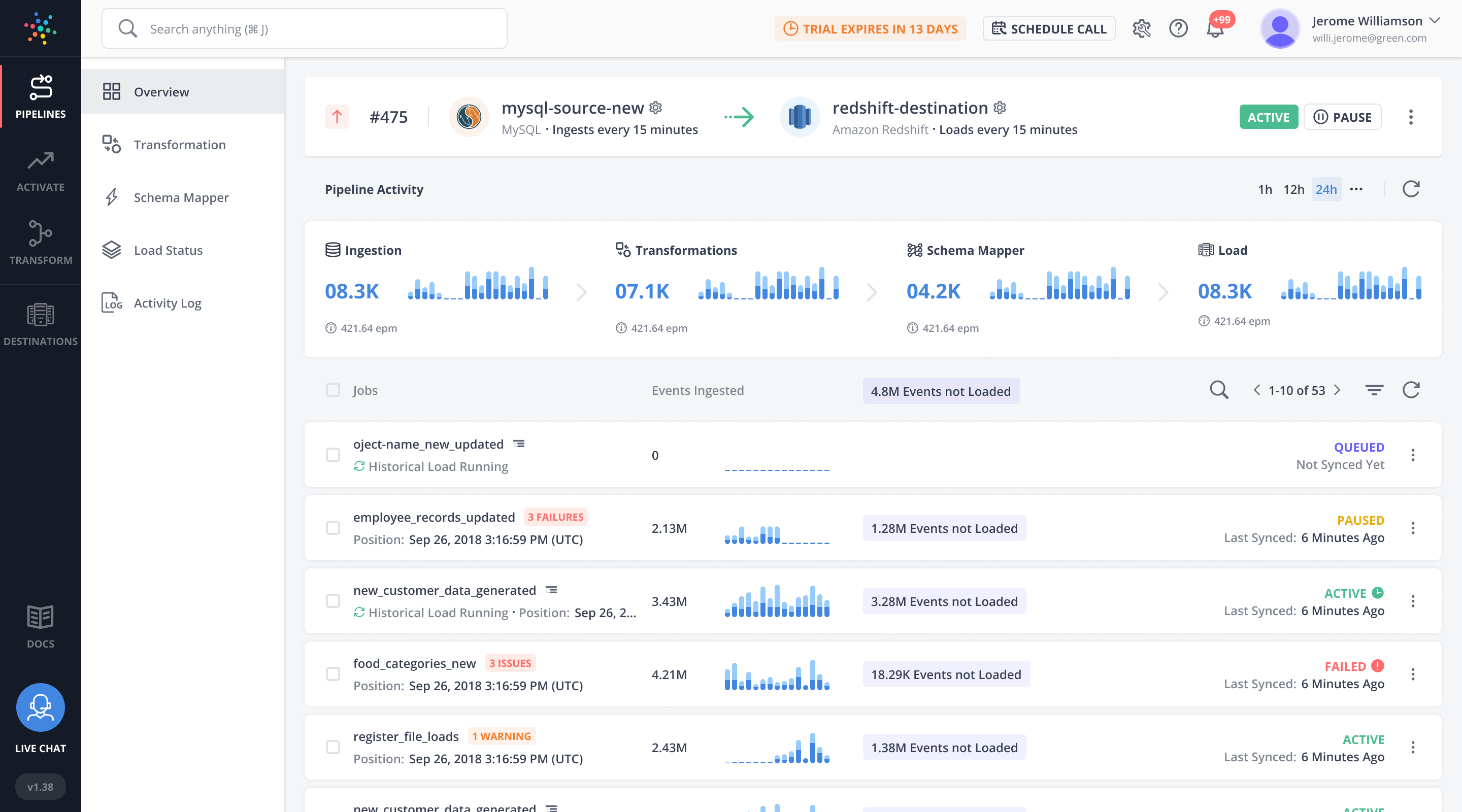

1. Integrate.io

Rating: 4.3/5 (G2)

Integrate.io is a cloud-based data integration platform that allows businesses to extract, transform, and load data from multiple sources into their Microsoft SQL Server databases. The platform provides advanced ETL and Reverse ETL features that help businesses send data to Microsoft SQL Server and push it back to other destination systems. Integrate.io has a rich set of built-in connectors, including Snowflake, Salesforce, NetSuite, Amazon Redshift, and Shopify, allowing businesses to extract data from multiple sources.

Integrate.io stands out with its intuitive drag-and-drop interface, which allows you to set up workflows and deploy pipelines quickly. It does not require extensive technical training.

Key Features

- ETL & Reverse ETL

- ELT & CDC

- API Management

- Data Observability

- 100+ in-built connectors

- Over 220 data transformations

- Low-code/no-code operation

Pricing

The platform features straightforward and flexible pricing per connector used. Irrespective of the volume of data, the platform only charges a flat rate per connector.

Integrate.io offers three pricing plans:

- Starter plan ($15,000 per year): Unlimited packages, transfers, and users, two connectors, and a scheduling cluster.

- Professional plan ($25,000 per year): Better suited for larger organizations, 99.5% SLA, advanced security features, and two scheduling clusters.

- Enterprise plan (customized based on your needs): Best for enterprises with advanced data integration requirements. Unlimited REST API connectors, source control development, a QA account, a SOC2 audit report, and more.

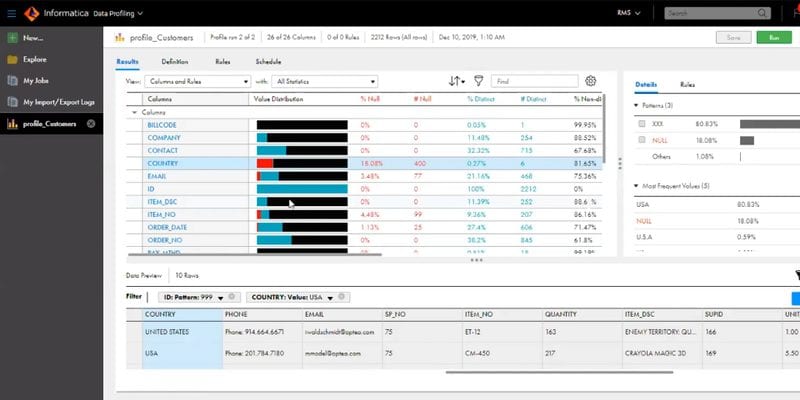

2. Informatica

Rating: 4.4 (G2)

Informatica is a versatile data integration and management system. It extracts data from diverse sources, applies the necessary transformations based on specific business needs, and loads the processed data into a SQL Server database. It has a wide range of connectors for various on-premise data sources and cloud services.

Key Features

- ETL/ELT

- CDC

- Hundreds of connectors

- Integrations with on-premise and cloud-based tools

Pricing

Informatica’s basic plan starts at $2,000/month.

3. Azure Data Factory

Rating: 4.5 (G2)

Azure Data Factory is Microsoft’s fully managed cloud-based data integration platform that allows one to carry out complex and customizable tasks. Along with ETL and ELT capabilities, ADF also provides built-in support for Git and CI/CD. The connectors enable seamless data migration between on-premise SQL Server Integration Services (SSIS) and cloud environments.

Key Features

- No-code ETL and ELT

- 90+ built-in connectors

- GUI and scripting-based interfaces

- ETL automation and monitoring capabilities

Pricing

Microsoft Azure Data Factory offers a $200 credit for 30 days, followed by a pay-as-you-go model for further usage.

4. Talend

Rating: 4.0 (G2)

Talend is a data integration solution that offers diverse connectors, enabling you to integrate data from various sources. The intuitive user interface allows businesses to quickly build and deploy ETL pipelines. Talend automatically generates Java code for the pipeline, eliminating the need to write code by hand.

Key Features

- ETL/ELT

- CDC

- Robust execution

- Metadata-driven design and execution

- A diverse set of built-in connectors

Pricing

Talend offers a 14-day free trial; further pricing details have not been disclosed.

5. Hevo Data

Rating: 4.3 (G2)

Hevo allows businesses to integrate data in near real-time. It offers 150+ connectors that enable businesses to integrate data from multiple sources. A few other notable features include real-time data integration, automatic schema detection, and the ability to handle big data.

Key Features

- ELT

- CDC

- 150+ data sources

Pricing

The free tier allows users to ingest up to 1 million records; paid plans with additional features start at $239/month.

6. SQL Server Integration Services (SSIS)

Rating: 4.6 (G2)

SQL Server Integration Services or SSIS is a powerful Microsoft tool for ETL functions. SSIS offers easy data transformation methods that allow users to efficiently extract, transform and load data from different sources.

Although SSIS is a component of Microsoft SQL Server, it has some limitations, such as a steep learning curve, complexity, and limited third-party connector options, which lead some businesses to look for SSIS alternatives.

Key Features

- Part of Microsoft SQL Server

- SSIS package can be deployed via Visual Studio

- Built-in data transformation capabilities

Pricing

SSIS is included with the SQL Server license, which starts at $230.

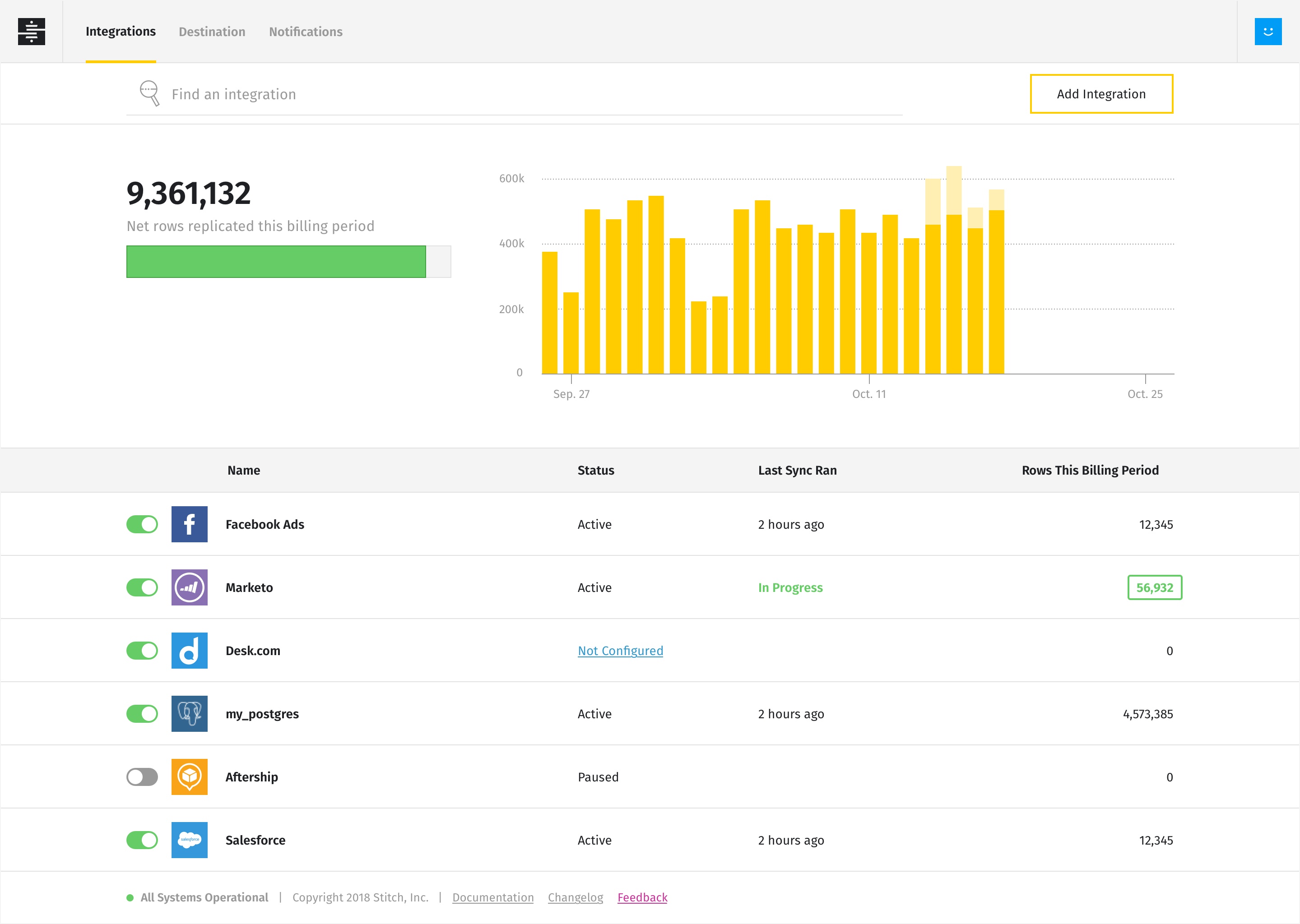

7. Stitch

Rating: 4.5 (G2)

Stitch is a no-code, cloud-based ETL tool. It offers 130+ data source connectors that let businesses extract data from various sources. Features like scheduling and data replication eliminate the need to write custom code.

Key Features

- ETL

- 130+ in-built connectors

- No-code tool

Pricing

Stitch offers three pricing plans, including a standard plan starting at $100/month (depending on the number of rows) and a premium plan at $2,500/month.

Best Practices for Optimizing Data Migration

Preparing data for migration

Data Cleansing and Validation

Data extraction is an integral phase of an ETL process. Data cleansing and validation are vital in ensuring data completeness and consistency.

Data cleaning involves identifying inconsistencies or inaccuracies in the source data and correcting them. This can include removing duplicate records, filling null values, and standardizing formats, all of which improve data reliability.

Data validation includes verifying data quality. This can be done by pre-defining certain rules and constraints to test the data quality. This step helps identify data discrepancies and ensure data integrity.
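As a rough illustration of the cleansing and validation steps above, the sketch below deduplicates records, fills missing values, standardizes a format, and then applies predefined rules. The record fields (`id`, `email`, `country`), the `UNKNOWN` placeholder, and the two rules are assumptions made up for this example.

```python
def clean_records(records):
    """Remove duplicates (by id), fill missing countries, and standardize email case."""
    seen, cleaned = set(), []
    for rec in records:
        if rec["id"] in seen:
            continue  # drop duplicate record, keeping the first occurrence
        seen.add(rec["id"])
        cleaned.append({
            "id": rec["id"],
            "email": (rec.get("email") or "").strip().lower(),  # standardize format
            "country": rec.get("country") or "UNKNOWN",  # fill null values
        })
    return cleaned

# Hypothetical validation rules: each maps a rule name to a predicate on one record.
RULES = {
    "id_is_positive": lambda r: isinstance(r["id"], int) and r["id"] > 0,
    "email_has_at_sign": lambda r: "@" in r["email"],
}

def validate(records):
    """Return (valid_records, failures), where failures lists (id, failed_rule_names)."""
    valid, failures = [], []
    for rec in records:
        failed = [name for name, check in RULES.items() if not check(rec)]
        if failed:
            failures.append((rec["id"], failed))
        else:
            valid.append(rec)
    return valid, failures
```

Keeping the rules in a dictionary makes it easy to report which rule a record failed, which is usually more useful for debugging than a single pass/fail flag.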

Data mapping and transformation

The relation between the source data and destination structure must be established early to ensure seamless integration. To align the source data and destination structure, the data needs to be mapped or transformed into the corresponding fields or attributes.

ETL tools provide various transformations that can be applied to the source data. This can include multiple operations such as filtering, aggregating, joining, or splitting. This step allows businesses to make sure the data is in alignment with their business needs.
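A minimal sketch of mapping followed by a filter-and-aggregate transformation might look like this. The source column names (`cust_nm`, `ord_amt`, `ord_dt`) and their destination names are hypothetical.

```python
# Hypothetical mapping from source column names to destination column names.
FIELD_MAP = {
    "cust_nm": "customer_name",
    "ord_amt": "order_amount",
    "ord_dt": "order_date",
}

def map_row(source_row):
    """Rename source fields to destination fields, ignoring unmapped columns."""
    return {dest: source_row[src] for src, dest in FIELD_MAP.items()}

def total_by_customer(rows):
    """Filter out non-positive amounts, then aggregate the amounts per customer."""
    totals = {}
    for row in rows:
        amount = float(row["order_amount"])
        if amount <= 0:
            continue  # filtering step
        name = row["customer_name"]
        totals[name] = totals.get(name, 0.0) + amount  # aggregation step
    return totals
```

Defining the mapping as data rather than code means the source-to-destination relationship can be reviewed and agreed on before any pipeline is built.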

Efficient data extraction techniques

Incremental load vs Full load

In the incremental load process, only the most recent changes are extracted from the source data and integrated into the destination. Since this approach only considers incremental changes, it is efficient and faster, especially when dealing with big data.

The full-load method, on the other hand, reloads the complete source data every time, regardless of what has changed. This ensures data completeness but is time-consuming and resource-heavy.

Parallel processing

Parallel processing refers to the simultaneous execution of multiple tasks in data pipelines to integrate data from source to destination. This process significantly speeds up the data integration process by dividing the data and tasks into smaller units which are processed in different threads or processors.
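The idea of splitting data into smaller units and processing them concurrently can be sketched with Python's standard `concurrent.futures` module. The upper-casing transformation is only a stand-in for real work, and the chunk size and worker count are arbitrary assumptions.

```python
from concurrent.futures import ThreadPoolExecutor

def chunked(items, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [items[i:i + size] for i in range(0, len(items), size)]

def transform_chunk(chunk):
    """Stand-in transformation: trim and upper-case each value."""
    return [value.strip().upper() for value in chunk]

def parallel_transform(items, chunk_size=2, workers=4):
    """Transform chunks concurrently; Executor.map yields results in input order."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = pool.map(transform_chunk, chunked(items, chunk_size))
        return [value for chunk in results for value in chunk]
```

Threads tend to help most when each unit of work waits on I/O, such as reading from a source system or writing to a database. For CPU-heavy transformations in Python, a process pool is usually the better fit.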

Performance optimization during data loading

Bulk loading

The bulk loading method loads data in batches rather than one row at a time. When large amounts of data are involved, inserting sets of rows together reduces per-row overhead and improves loading performance.
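A minimal sketch of batched loading is shown below, using SQLite as a stand-in for the destination. The `events` table and the batch size are assumptions for illustration; SQL Server has its own bulk mechanisms, such as the `BULK INSERT` statement and the `bcp` utility, which this example does not use.

```python
import sqlite3

def bulk_load(conn, rows, batch_size=1000):
    """Insert rows in batches, committing once per batch instead of once per row.

    Returns the number of batches written.
    """
    conn.execute("CREATE TABLE IF NOT EXISTS events (id INTEGER, payload TEXT)")
    batches = 0
    for start in range(0, len(rows), batch_size):
        batch = rows[start:start + batch_size]
        conn.executemany("INSERT INTO events VALUES (?, ?)", batch)
        conn.commit()  # one transaction per batch
        batches += 1
    return batches
```

The batch size is a trade-off: larger batches mean fewer round trips and commits, while smaller batches limit how much work is lost or retried if a batch fails.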

Indexing strategies

Indexing allows the database to quickly locate and retrieve records, which improves query execution speed and plays a crucial role in optimizing the ETL process. Here are a few indexing methods:

- Primary key indexing: a primary key uniquely identifies each row in a table, which allows quick lookups.

- Foreign key indexing: a foreign key links two tables by referencing the primary key of another table. Indexing it helps with efficient joins and mapping.

- Column indexing: This process includes indexing a particular column in the data structure. This allows faster data retrieval for frequently queried columns.

- Clustered indexing: Clustered indexing works by determining the physical order of the rows in the table. The ordered data can be quickly retrieved in the ETL process.
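The effect of a column index can be observed by asking the database for its query plan before and after creating the index. The sketch below does this in SQLite, used here only as a stand-in: the `orders` table and index name are hypothetical, and SQL Server exposes execution plans through its own tooling and syntax.

```python
import sqlite3

def build_orders(conn, n=1000):
    """Create and populate a hypothetical orders table."""
    conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, customer_id INTEGER, total REAL)")
    conn.executemany(
        "INSERT INTO orders VALUES (?, ?, ?)",
        [(i, i % 50, float(i)) for i in range(1, n + 1)],
    )

def query_plan(conn, sql):
    """Return the plan detail strings SQLite reports for a query."""
    return [row[3] for row in conn.execute("EXPLAIN QUERY PLAN " + sql)]

# A lookup on a frequently queried, non-key column.
LOOKUP = "SELECT total FROM orders WHERE customer_id = 7"

def plans_before_and_after_index(conn):
    """Capture the plan for LOOKUP without and then with an index on customer_id."""
    before = query_plan(conn, LOOKUP)
    conn.execute("CREATE INDEX idx_orders_customer ON orders (customer_id)")
    after = query_plan(conn, LOOKUP)
    return before, after
```

Without the index, the plan reports a scan of the whole table; with it, the plan reports a search using `idx_orders_customer`. Indexes also add write overhead, so some teams drop or disable non-essential indexes during large loads and rebuild them afterwards.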

Partitioning data

Partitioning data involves dividing datasets into smaller partitions based on specific criteria such as a range, a list, or a hash. Because the workload is distributed according to these criteria, the database engine can query only the relevant partitions instead of scanning the complete table.
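Range and hash partitioning can be illustrated in plain Python as follows. This is a conceptual sketch of how rows get assigned to partitions, not a database feature: the row shape, the boundary values, and the use of CRC32 as the hash are assumptions for the example.

```python
import bisect
import zlib
from collections import defaultdict

def partition_by_range(rows, key, boundaries):
    """Assign each row to a range partition.

    `boundaries` is a sorted list of split points: partition 0 holds values below
    the first boundary, partition 1 holds values from the first boundary up to
    (but not including) the second, and so on.
    """
    partitions = defaultdict(list)
    for row in rows:
        index = bisect.bisect_right(boundaries, row[key])
        partitions[index].append(row)
    return dict(partitions)

def partition_by_hash(rows, key, num_partitions):
    """Assign each row to a partition using a stable hash of the key."""
    partitions = defaultdict(list)
    for row in rows:
        bucket = zlib.crc32(str(row[key]).encode()) % num_partitions
        partitions[bucket].append(row)
    return dict(partitions)
```

Range partitioning suits queries that filter on the partition key, such as a date range, while hash partitioning aims to spread rows evenly when there is no natural range to filter on.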

Ensuring data integrity and error-handling

Data quality checks

Data quality checks are integral to the data integration and migration process. These checks ensure the data is complete, consistent, and reliable. If there are any flaws or errors in the data, they help identify the problems early on.

Here are some key aspects:

- Data profiling: this involves examining the source data structure, quality, and patterns to check for any anomalies or discrepancies.

- Data cleansing: removing duplicates, filling or removing null values, standardizing data formats, etc.

- Data validation: having a pre-determined set of rules and constraints to test the source data.

- Data consistency and accuracy: checking for data consistency among related source structures, and validating the data correctness to ensure it reflects the business requirements.
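A simple form of data profiling, together with a duplicate-key consistency check, might look like the sketch below. The metrics chosen (row count, null count, distinct count) and the treatment of empty strings as nulls are assumptions for illustration; real profiling tools report much more.

```python
from collections import Counter

def profile(rows, columns):
    """Summarize each column: row count, null count, and distinct non-null values."""
    report = {}
    for col in columns:
        values = [row.get(col) for row in rows]
        # Treat both None and the empty string as missing (an assumption).
        non_null = [v for v in values if v not in (None, "")]
        report[col] = {
            "rows": len(values),
            "nulls": len(values) - len(non_null),
            "distinct": len(set(non_null)),
        }
    return report

def duplicate_keys(rows, key):
    """Return key values that appear more than once, a common consistency check."""
    counts = Counter(row[key] for row in rows)
    return sorted(k for k, n in counts.items() if n > 1)
```

Running a profile like this on the source before migration, and again on the destination afterwards, gives a quick way to spot rows or values that were lost or altered along the way.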

Monitoring and Error logging

Monitoring ETL data pipelines in the data migration and integration process plays a crucial role in ensuring smooth execution and data flow. ETL tools like Integrate.io, Azure Data Factory, etc. provide inbuilt features that allow you to set up custom notifications during the pipeline run. This helps keep a watch on potential issues.

Error logs and alerts help identify when a particular ETL data pipeline component fails. The error logs help in debugging and resolving the errors. ETL tools provide error-handling mechanisms, allowing you to retry the failed component.
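The retry-and-log behavior described above can be sketched with Python's standard `logging` module. This is a generic illustration, not the mechanism of any specific ETL tool: the logger name, the attempt limit, and the fixed delay are assumptions.

```python
import logging
import time

logger = logging.getLogger("etl")  # hypothetical logger name

def run_with_retry(task, max_attempts=3, delay_seconds=0.0):
    """Run a pipeline step, logging each failure and retrying up to max_attempts times."""
    for attempt in range(1, max_attempts + 1):
        try:
            result = task()
            logger.info("step succeeded on attempt %d", attempt)
            return result
        except Exception as exc:
            logger.error("step failed on attempt %d: %s", attempt, exc)
            if attempt == max_attempts:
                raise  # surface the final failure so an alert can be raised upstream
            time.sleep(delay_seconds)
```

Retries are most useful for transient failures such as network timeouts. A step that fails because of bad data will fail again on every attempt, so the logged error is what points you to the underlying cause.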

Conclusion

In case you skipped the above, here’s a quick overview of the advantages of using SQL Server ETL tools for data migration, along with best practices to optimize the data integration and migration process:

Key advantages of using SQL Server ETL Tools:

- Streamlined Data Integration

- Enhanced Efficiency

- Data Quality, Integrity, Governance, and Security

- Scalability and Flexibility

- Comprehensive Monitoring and Error-Handling

Best ETL practices

- Perform data cleansing and validation to ensure completeness and consistency.

- Establish the relation between source data and destination structure through mapping or transformation.

- Consider incremental load for faster processing of recent changes.

- Evaluate the trade-offs of full load for complete data integration.

- Use bulk loading to improve integration performance for large data volumes.

- Implement indexing strategies (e.g., primary key, foreign key, column, clustered) for faster data retrieval.

- Divide datasets into smaller partitions based on specific criteria for efficient querying.

- Conduct data quality checks (like profiling, cleansing, and validation) to ensure reliable data.

- Monitor and log errors for prompt debugging and resolution.

- Set up custom notifications for monitoring ETL pipelines during execution.

- Utilize error logs and alerts to identify and address failures in the pipeline.

By now, you are in a position to choose the right data migration ETL tool for your business needs and understand the best practices to integrate or migrate data and optimize the ETL process.

If you’re looking for a quick-to-set-up and easy-to-operate ETL tool, consider choosing Integrate.io. With its drag-and-drop components, data transformation methods, quick customer support, and simple pricing model, it takes care of all your data needs.

Experience streamlined data integration and migration with Integrate.io.

Sign up now for a 14-day trial or schedule a demo and explore Integrate.io’s benefits firsthand!

FAQs

Q: Is Microsoft SQL Server an ETL tool?

No, Microsoft SQL Server itself is not an ETL tool. However, it includes SQL Server Integration Services (SSIS), which is a powerful ETL tool.

Q: What is the ETL tool in SQL?

The primary ETL tool associated with SQL Server is SQL Server Integration Services (SSIS).

Q: Is SSIS an ETL tool?

Yes, SSIS (SQL Server Integration Services) is an ETL tool used for extracting, transforming, and loading data.

Q: Is SSRS an ETL tool?

No, SSRS (SQL Server Reporting Services) is not an ETL tool. It is used for creating and deploying reports from data that has already been loaded into a database.