Picture this: during a bustling holiday season, a global e-commerce giant faces a sudden influx of online orders from customers worldwide. As the company's data pipelines navigate a labyrinth of interconnected systems, ensuring the seamless flow of information for timely product deliveries becomes paramount. However, a critical error lurking within their data pipeline goes undetected, causing delays, dissatisfied customers, and significant financial losses. This cautionary tale underscores the indispensability of data pipeline monitoring, an essential practice that safeguards against such mishaps and unlocks the full potential of data integration.

5 key points about data pipeline monitoring:

- Monitor and identify bugs in data pipelines effectively by utilizing the right monitoring tools, such as logging frameworks and observability systems.

- Gain valuable insights into your data pipelines through data visualization tools, enabling you to analyze latency, error rates, data throughput, and other crucial aspects.

- Achieve data integration objectives by consistently reviewing and optimizing your data pipelines, ensuring seamless data flow and high-quality outcomes.

- Leverage the power of ETL/ELT methodologies to enhance data pipeline monitoring, allowing you to observe data and ensure its accuracy during the transformation stage.

- By combining robust monitoring tools, data visualization, continuous review, and ETL/ELT techniques, you can establish a comprehensive data pipeline monitoring strategy that maximizes efficiency and drives data excellence in your organization.

Remember, implementing a comprehensive data pipeline monitoring strategy empowers organizations to optimize data integration, make informed decisions, and drive data excellence in today's data-driven landscape.

In this article, we discuss how data pipeline monitoring can help you optimize your data integration solutions, and we outline your options for choosing the right monitoring tool for your business.

Why Should You Monitor a Data Pipeline?

Data pipeline monitoring is a critical practice that ensures data flows successfully from source to destination for analysis. By actively monitoring data pipelines, organizations can maintain data quality, reduce the impact of user errors, catch inaccuracies such as OCR misreads, and detect other bugs before they affect crucial analysis processes.

In an era where data privacy regulations like GDPR and CCPA demand strict compliance, monitoring data quality as data moves between locations becomes a strategic imperative for avoiding hefty regulatory fines. Proactive pipeline monitoring also strengthens security by helping you detect unauthorized access to sensitive data early and limit the damage of potential breaches.

How Do You Monitor Data Pipelines?

Monitoring data pipelines encompasses a range of effective strategies and tools, which you'll learn about below. Some of the most common methods include using ETL/ELT for pipelines, investing in monitoring and data visualization tools, and continuously reviewing pipelines.

Determine Your Objectives and KPIs for Data Pipeline Monitoring

Image source: http://ak.vbroek.org/project/data-pipeline-graphic/

Before embarking on data pipeline monitoring, it is crucial to define clear objectives aligned with the organization's specific needs. For instance, compliance with data privacy regulations like GDPR and CCPA may drive the need for monitoring data quality. Failure to comply with these regulations can result in expensive fines from regulators, so it's important to track data quality as it moves from one location to another.

Organizations must also identify relevant Key Performance Indicators (KPIs) to measure the efficacy of their data pipeline monitoring efforts.

These KPIs can include:

- Data latency, which measures how long it takes data to move through your pipelines

- Availability, which measures the share of time your pipelines function correctly

- Utilization, which measures how much of your resources, including CPU and disk space, your pipelines use at any given time

The KPIs you choose will depend on various factors, such as your objectives for data pipeline monitoring, the monitoring tools you use, and the type of pipelines you have created — ETL/ELT pipelines, event-driven pipelines, streaming pipelines, etc.

By aligning objectives with specific KPIs, organizations can effectively track and improve their data integration workflows.
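As a rough illustration of how these KPIs might be computed from run records, here is a minimal Python sketch. The `PipelineRun` record and its fields are hypothetical, not part of any particular tool. Availability is approximated as the fraction of successful runs, and utilization covers CPU only; both are simplifying assumptions.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class PipelineRun:
    """One execution of a pipeline (hypothetical record shape)."""
    started_at: datetime
    finished_at: datetime
    succeeded: bool
    cpu_seconds: float  # CPU time consumed by the run


def average_latency(runs: list[PipelineRun]) -> timedelta:
    """Mean wall-clock time a run needs to move data through the pipeline."""
    total = sum((r.finished_at - r.started_at for r in runs), timedelta())
    return total / len(runs)


def availability(runs: list[PipelineRun]) -> float:
    """Fraction of runs that succeeded (a proxy for time spent working correctly)."""
    return sum(r.succeeded for r in runs) / len(runs)


def cpu_utilization(run: PipelineRun, cores: int = 1) -> float:
    """CPU time used divided by the CPU time available during the run."""
    wall_seconds = (run.finished_at - run.started_at).total_seconds()
    return run.cpu_seconds / (wall_seconds * cores)
```

In practice you would feed these functions from whatever run history your scheduler or monitoring tool exposes, and compare the results against targets you set for each objective.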

Choose the Right Monitoring Tools

After defining your objectives and KPIs for data pipeline monitoring, you should choose the right monitoring tools. These tools gather information about the functionality and overall health of the data pipelines in your organization, allowing you to identify bugs and confirm that everything is running smoothly. Monitoring tools include the following:

- Logging frameworks

- Observability tools

- Data visualization tools

Learn more about all of these software types below:

Logging frameworks

Logging frameworks collect log data from the various components involved in data pipelines, such as data sources and target systems. These tools generate logs for individual data pipeline jobs and record the different events that can impact pipelines, such as user errors. The best logging frameworks collect log data in real time, allowing you to identify bugs as they happen and trace them to their root cause.
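To make per-job logging concrete, the sketch below uses Python's standard `logging` module to emit one JSON object per event, tagged with a job ID and stage. The field names (`job_id`, `stage`) and the `run_job` function are illustrative assumptions rather than a convention of any specific logging framework.

```python
import json
import logging


class JsonFormatter(logging.Formatter):
    """Render each log record as one JSON object per line."""

    def format(self, record: logging.LogRecord) -> str:
        payload = {
            "level": record.levelname,
            "logger": record.name,
            "message": record.getMessage(),
            # Values passed via `extra=` become attributes on the record.
            "job_id": getattr(record, "job_id", None),
            "stage": getattr(record, "stage", None),
            # Present only when the record was logged with exception info.
            "error": repr(record.exc_info[1]) if record.exc_info else None,
        }
        return json.dumps(payload)


def build_logger(name: str = "pipeline") -> logging.Logger:
    logger = logging.getLogger(name)
    logger.setLevel(logging.INFO)
    if not logger.handlers:  # avoid attaching duplicate handlers
        handler = logging.StreamHandler()
        handler.setFormatter(JsonFormatter())
        logger.addHandler(handler)
    return logger


def run_job(job_id: str, rows: list[dict], logger: logging.Logger) -> int:
    """Process rows and log every failure with enough context to trace it."""
    context = {"job_id": job_id, "stage": "transform"}
    ok = 0
    for index, row in enumerate(rows):
        try:
            float(row["amount"])  # stand-in for real processing
            ok += 1
        except (KeyError, TypeError, ValueError):
            logger.exception("row %d rejected", index, extra=context)
    logger.info("job finished: %d of %d rows ok", ok, len(rows), extra=context)
    return ok
```

Because every line is structured and carries the job ID, a log collector can filter by job or stage and show the failing row alongside the exception that caused it.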

Observability tools

Observability tools also collect data from different pipeline components but provide a more holistic overview of the entire pipeline, including where the pipeline stores data and how data processing takes place. These tools also provide insights into how to use data to optimize business intelligence workloads. The best observability tools use artificial intelligence, machine learning, predictive analytics, and other methods to surface accurate insights about your pipelines for your team.

Data visualization tools

Data visualization tools display different metrics and KPIs from logging frameworks and observability tools, helping you generate insights about your data pipelines. A data visualization tool might present data sets in the form of graphs, charts, reports, and dashboards, helping you better understand your data pipeline workflows.
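A dedicated visualization tool does far more than this, but the underlying idea can be sketched in a few lines of Python: aggregate raw events into a metric, then render it so trends stand out. The event shape (`stage` and `level` keys) is an assumption made for the example.

```python
from collections import Counter


def error_rate_by_stage(events: list[dict]) -> dict[str, float]:
    """Share of events per pipeline stage that were logged at ERROR level."""
    totals, errors = Counter(), Counter()
    for event in events:
        totals[event["stage"]] += 1
        if event["level"] == "ERROR":
            errors[event["stage"]] += 1
    return {stage: errors[stage] / totals[stage] for stage in totals}


def render_bar_chart(series: dict[str, float], width: int = 40) -> str:
    """Render label/value pairs as a horizontal text bar chart."""
    peak = max(series.values()) or 1.0  # avoid dividing by zero
    label_width = max(len(label) for label in series)
    lines = []
    for label, value in series.items():
        bar = "#" * round(value / peak * width)
        lines.append(f"{label:<{label_width}} | {bar} {value:g}")
    return "\n".join(lines)
```

Calling `print(render_bar_chart(error_rate_by_stage(events)))` gives a quick view of which stage produces the most errors; a dashboard applies the same aggregation continuously and adds history, filtering, and alerting.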

The above list of monitoring tools is not exhaustive, and some software might have features that overlap with other technologies. For example, the best observability tools also have data visualization capabilities, allowing you to observe and then analyze pipelines. You can find open-source and commercial logging frameworks, data observability tools, and data visualization tools on the market.

As well as helping you create error-free and successful data pipelines, Integrate.io generates data observability and monitoring insights and sends you alerts when an error or other event occurs. For example, you can receive custom notifications when something goes wrong in your pipeline and take action to preserve data quality.

Continuously Review Your Data Pipelines

Effective data pipeline monitoring is not a one-time task but an ongoing process, and it isn’t only beneficial when you first create pipelines. You should continue to monitor data as it flows from one location to another so you can comply with data governance guidelines, avoid bottlenecks, generate valuable business intelligence, and improve data integration workflows. Continuous monitoring also involves tracking your pipelines as your business scales. As more big data flows through your organization, you need pipelines that continue to move that data to the correct location.

One of the best ways to review data pipelines is to document how those pipelines perform over time and share these results with your data engineering team. Learning how pipelines automate data integration and move data between a source and a destination, such as a data warehouse or data lake, can help you create even more successful pipelines in the future. Regularly testing data pipelines is also important to ensure data moves to the correct location without any hiccups.
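Two simple checks illustrate what a recurring review could automate: comparing the latest run against documented history, and testing that everything in the source arrived at the destination. This is a minimal Python sketch; the function names, the 1.5x tolerance, and the three-run minimum are arbitrary assumptions to adjust for your own pipelines.

```python
from statistics import median


def is_regression(history: list[float], latest: float, tolerance: float = 1.5) -> bool:
    """True if the latest run took more than `tolerance` times the median past run."""
    if len(history) < 3:  # too little history to judge
        return False
    return latest > tolerance * median(history)


def reconcile(source_ids: set, destination_ids: set) -> dict[str, list]:
    """Compare record IDs at both ends of a pipeline."""
    return {
        "missing": sorted(source_ids - destination_ids),     # never arrived
        "unexpected": sorted(destination_ids - source_ids),  # not in the source
    }
```

Running checks like these on a schedule, and recording their results, gives your data engineering team a performance history to review instead of relying on ad hoc inspection.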

Using ETL/ELT for Data Pipeline Monitoring

You can create data pipelines in multiple ways — via data streaming, batch processing, event-driven architecture, etc. — but Extract, Transform, Load (ETL) and Extract, Load, Transform (ELT) can help you monitor those pipelines too. Take ETL, for example:

- ETL extracts data from a data source such as a customer relationship management (CRM) system or transactional database and places it into a staging area.

- Next, the transformation stage takes place. This process involves cleansing and validating data so that it is accurate and in the correct format for analytics. At this point in the pipeline, you can monitor data and discover any issues with the way your pipeline runs.

- Finally, ETL loads data to a warehouse or another central repository like Amazon Redshift, Google BigQuery, or Snowflake.
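The three ETL stages above can be sketched in Python with monitoring built into the transformation step. Everything here is illustrative: the sample rows, the validation rules, and the `TransformReport` type are assumptions, and a plain list stands in for the warehouse.

```python
from dataclasses import dataclass, field


@dataclass
class TransformReport:
    """Outcome of the transform stage, kept so it can be monitored."""
    accepted: list[dict] = field(default_factory=list)
    rejected: list[tuple[dict, str]] = field(default_factory=list)

    @property
    def rejection_rate(self) -> float:
        total = len(self.accepted) + len(self.rejected)
        return len(self.rejected) / total if total else 0.0


def extract() -> list[dict]:
    """Stand-in for reading from a CRM or transactional database into staging."""
    return [
        {"order_id": "1001", "email": "Ana@Example.com", "amount": "19.99"},
        {"order_id": "1002", "email": "", "amount": "5.00"},
        {"order_id": "1003", "email": "li@example.com", "amount": "n/a"},
    ]


def transform(staged: list[dict]) -> TransformReport:
    """Cleanse and validate staged rows, recording why each bad row failed."""
    report = TransformReport()
    for row in staged:
        if not row["email"]:
            report.rejected.append((row, "missing email"))
            continue
        try:
            amount = float(row["amount"])
        except ValueError:
            report.rejected.append((row, "amount is not a number"))
            continue
        report.accepted.append(
            {"order_id": int(row["order_id"]), "email": row["email"].lower(), "amount": amount}
        )
    return report


def load(rows: list[dict], warehouse: list[dict]) -> int:
    """Stand-in for writing to a warehouse; returns the number of rows loaded."""
    warehouse.extend(rows)
    return len(rows)
```

A scheduler could run `report = transform(extract())`, raise an alert when `report.rejection_rate` crosses a threshold you choose, and only then call `load(report.accepted, warehouse)`.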

Depending on your use case, you can use ELT to move data to a new location for analytics instead. This data integration method reverses the "transform" and "load" stages of ETL, with transformations taking place inside the central repository. However, you can still monitor your pipeline during the transformation stage and ensure data is consistent, clean, and compliant.
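With ELT, the same kind of checks can run as SQL inside the repository after the raw data lands. The sketch below uses Python's built-in `sqlite3` module with an in-memory database as a stand-in for a real warehouse; the table names, columns, and checks are assumptions chosen for illustration.

```python
import sqlite3


def load_raw(conn: sqlite3.Connection, rows: list[tuple]) -> None:
    """Load step: land the data untransformed."""
    conn.execute("CREATE TABLE raw_orders (order_id TEXT, email TEXT, amount TEXT)")
    conn.executemany("INSERT INTO raw_orders VALUES (?, ?, ?)", rows)


def quality_checks(conn: sqlite3.Connection) -> dict[str, int]:
    """Count problem rows in the raw table before transforming it."""
    checks = {
        "missing_email": (
            "SELECT COUNT(*) FROM raw_orders WHERE email IS NULL OR email = ''"
        ),
        "duplicate_order_id": (
            "SELECT COUNT(*) FROM (SELECT order_id FROM raw_orders "
            "GROUP BY order_id HAVING COUNT(*) > 1)"
        ),
    }
    return {name: conn.execute(sql).fetchone()[0] for name, sql in checks.items()}


def transform_in_warehouse(conn: sqlite3.Connection) -> int:
    """Transform step: build a clean table with SQL and return its row count."""
    conn.execute(
        """
        CREATE TABLE orders AS
        SELECT DISTINCT order_id,
               LOWER(email) AS email,
               CAST(amount AS REAL) AS amount
        FROM raw_orders
        WHERE email IS NOT NULL AND email <> ''
        """
    )
    return conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
```

To try it, open a connection with `sqlite3.connect(":memory:")`, call `load_raw`, inspect the counts from `quality_checks`, and then run `transform_in_warehouse`. Against a production warehouse the SQL dialect and connection setup would differ.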

How Integrate.io Can Help With Monitoring and Debugging Your Data Pipeline

Monitoring your pipelines is critical for ensuring data quality and reducing the chances of errors that might impact analysis. However, as you can see above, the process of tracking your pipelines can be difficult. You will need to determine your objectives for monitoring, choose the right KPIs, invest in the right tools, and regularly monitor and document your pipelines.

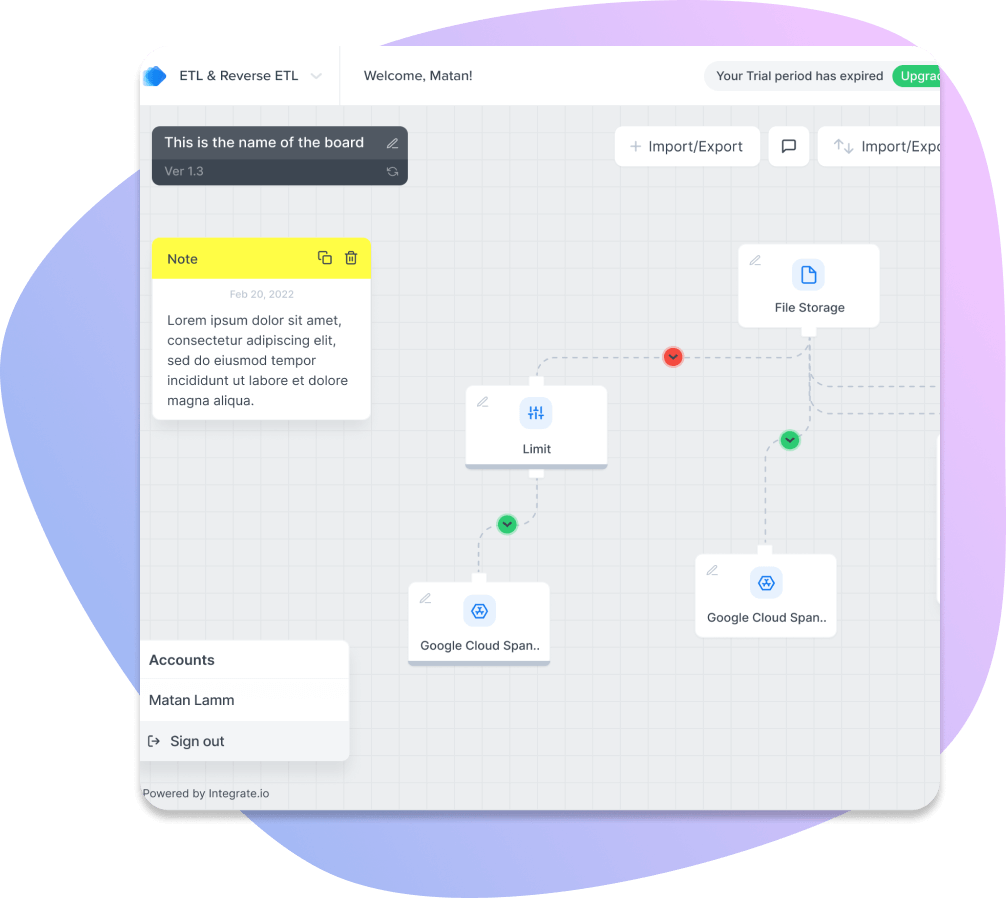

Integrate.io automates the data pipeline process with more than 200 no-code data connectors that sync data between different sources and locations, reducing the need for hands-on data engineering. You no longer need experience with a language like SQL or Python to execute the pipelines you need. Integrate.io also provides data observability insights that can help you monitor and identify issues with your pipelines. You’ll receive instant custom notifications when data problems occur, allowing you to improve the quality of your data sets.

Integrate.io's easy-to-use drag-and-drop interface

Integrate.io also offers the following benefits for data integration:

- Create ETL, ELT, CDC, and Reverse ETL pipelines in minutes with no code via Integrate.io’s powerful drag-and-drop interface.

- Benefit from the industry’s fastest ELT data replication platform, unify data every minute, and create a single source of truth for reporting.

- Design self-hosted and secure REST APIs with Integrate’s API management solutions. You can instantly read and write REST APIs and get more value from your data products.

Now you can unleash the power of big data with a 14-day free trial. Alternatively, schedule an intro call with one of our experts to address your unique business use case. Our team will identify your challenges and discuss solutions one-on-one.

Final Thoughts

In conclusion, data pipeline monitoring is an indispensable practice for organizations seeking to optimize data integration efficiency and harness the full potential of their data assets. By implementing a robust monitoring framework, defining clear objectives and KPIs, selecting the right tools, continuously reviewing pipelines, and leveraging methodologies like ETL/ELT, businesses can ensure seamless data flow, comply with regulations, and unlock valuable business insights. Explore the diverse array of monitoring tools available, including options like Integrate.io, to empower your organization with streamlined, error-free data pipelines and achieve data excellence in today's data-driven landscape.

Remember, data pipeline monitoring is an ongoing process, and staying proactive is the key to success. Embrace the power of monitoring, debugging, and optimizing your data pipelines to unleash the full potential of your organization's data-driven endeavors.