For a CTO, full-stack engineer, or systems architect, the question isn’t so much what is possible with Amazon Redshift, but how. How do you ensure optimal, consistent runtimes on analytical queries and reports? And how do you do that without taxing precious engineering time and resources?

Extract, Transfer, and Load (ETL) is a tried-and-true process for moving data into warehouses like Redshift for analytics and reporting. But not all ETL processes are created equal. Poor ETL practices can lead to longer runtimes and inconsistent results.

Therefore, it’s critical to the health of your business to develop the most efficient and robust Redshift ETL infrastructure.

This guide will cover everything you need to know about building and maintaining your Redshift ETL pipeline. We’ll cover ETL best practices and advanced tips, whether to build or buy your Redshift ETL pipeline, and tools to help you achieve the best results.

But first, let’s look at why Amazon Redshift is the preferred cloud warehouse for so many fast-growing, data-driven organizations.

Table of Contents

5 Reasons Why Businesses Love Amazon Redshift

Setting up Amazon Redshift ETL: Best practices and advanced tips

Redshift ETL Best Practices: Faster, better, cheaper

Advanced Tips for Redshift ETL

5 Reasons Why Businesses Love Amazon Redshift

Amazon Redshift is one of the most popular data warehouses in the world and for good reason. Not only is it incredibly powerful, but flexible and easy to use as well.

Here are 5 reasons why Redshift rocks:

1. Ease of Use

Redshift is incredibly intuitive if you’re familiar with SQL-based commands. That’s by design. Redshift can be easily scaled up or down, and many administrative tasks can be automated through their wide-range of scripts. When you spend less time maintaining your data warehouse, you have more time to develop analytics.

2. Cost Efficiency

Redshift pricing is extremely customizable, so you only pay for what you need.

Compared to legacy data warehouses, Redshift provides the perfect blend of startup affordability and massive cost efficiencies at scale. It offers two different pricing models: on-demand and reserved instance pricing. You can also optimize your costs with Redshift’s region-based and node-based pricing options.

3. Configuration and Management

Once you set your schemas and provisions, Redshift handles provisions, configuration, and patching on its own. It offers efficiency and performance gains by automating the most time-consuming activities, like replication and backup to Amazon S3. Scaling your Redshift cluster can be completed with just a single API call or through the intuitive AWS management console.

4. Harnessing the Amazon Ecosystem

One of the biggest benefits of Redshift is utilizing the massive ecosystem that surrounds it. As the world’s leading cloud platform, AWS offers an unparalleled number of integrations with your favorite tools.

The synergy created in this ecosystem translates to real dollars and cents. For example, if you use AWS and Redshift, you also get access to Redshift Spectrum, which allows you to expand your analytical processing (using Amazon S3) without adding nodes.

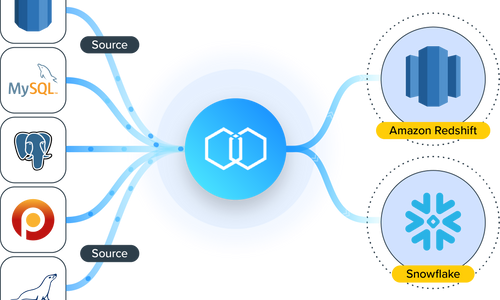

5. Expansive and simple ETL connections

Finally, let’s bring it back to the purpose of this article. Redshift offers a wide variety of ETL solutions, from open-source setups to dedicated Redshift ETL-as-a-Service providers. Redshift also enables you to connect virtually any data source.

The bottom line is this: You can’t go wrong with Amazon Redshift. From one-person startups to Fortune 10 corporations, Redshift has the power and flexibility to power your data analytics program.

We'll handle your ETL pipeline.

Setting up Amazon Redshift ETL: Best practices and advanced tips

Redshift is a world-class data warehouse. But it’s only as good as your ETL process allows. If your data flow into Redshift is slow, inconsistent, or unreliable, your analytics will be unusable.

So, how do you build and maintain the optimal Redshift ETL process? First, let’s look at the best practices as defined by AWS itself. Then we’ll show you advanced tips to take your ETL pipeline from good to great.

Redshift ETL Best Practices: Faster, better, cheaper

After basic setup and configuration, Amazon Redshift can essentially run on its own. But over time, poor ETL hygiene can lead to slower runtimes and higher costs.

Follow these best practices — recommended by AWS — to ensure your Redshift cluster is operating at peak performance.

1. COPY data from multiple, evenly sized files

As a massive parallel processing (MPP) database, Amazon Redshift analyzes data across multiple nodes at once. Each node is subdivided into smaller sections called slices.

The number of nodes and slices-per-node varies, but one fact never changes: Your runtimes are only as fast as your slowest slice. That’s why it’s critical to distribute the data load across your nodes and slices as evenly as possible.

2. Optimize workload management

Use Redshift’s Workload Management (WLM) to create separate “driving lanes” — or queues — for different types of processes. For example, create separate, dedicated queues for ETL process and reporting queries.

Some processes are more resource-intensive than others. Setting up different queues ensures that commit-intensive processes, like analytical queries, don’t bog down runtimes for simpler processes, like transactional queries.

There are several best practices for optimizing workload management. First, limit the number of concurrently-running queues to 15. Then configure your WLM so that queries claim extra available memory at the time of running. These simple steps enable every query to process with the maximum available resources.

3. Perform regular table maintenance

Due to Redshift’s ability for rapid data transformation, tables and rows are constantly being created and deleted. However, even when these spaces become unused, they are not actually deleted, but simply ‘marked’ for deletion.

If too much space is taken up by old tables and rows, things can get messy inside your cluster. This ultimately leads to slower runtimes.

You can avoid this fate by using the VACUUM and ANALYZE functions on a regular basis. These tools help you identify and remove unused rows and tables. To find a complete list of tables that need VACUUMing, use the Redshift Util’s table_info script.

4. Perform multiple transformation steps in a single transaction

Many ETL transformation processes require multiple steps. Since each COMMIT is a resource-intensive function, running each step on its own can increase runtime and eat up CPUs.

Instead, use a bracket in your process with a BEGIN...COMMIT statement to run the entire process at once. Here’s an example from AWS:

BEGIN;

CREATE temporary staging_table;

INSERT INTO staging_table SELECT .. FROM source (transformation logic);

DELETE FROM daily_table WHERE dataset_date =?;

INSERT INTO daily_table SELECT .. FROM staging_table (daily aggregate);

DELETE FROM weekly_table WHERE weekending_date=?;

INSERT INTO weekly_table SELECT .. FROM staging_table(weekly aggregate);

COMMIT;

Some ETL-as-a-Service tools, like Integrate.io, will automatically take care of this step for you.

5. Load data in bulk

Redshift is designed for massive amounts of data. Take full advantage by loading data in bulk. Redshift utilizes the unlimited scalability of Amazon S3 to make it simple to COPY large amounts of data into Redshift quickly.

Before COPYing large amounts of data directly into Redshift, accumulate the data from all of your sources into an S3 bucket. Then use temporary staging tables to hold the data for transformation. Then you can perform the bulk COPY operation. Afterwards, the temporary staging tables will be dropped, but not completely deleted (See Tip #3).

If you’re using an ETL-as-a-Service tool like Integrate.io, this action is managed for you. Simply identify your sources and Integrate.io will handle the rest.

6. Use UNLOAD to extract large file sets

You have two options for extracting data from Redshift: SELECT and UNLOAD. SELECT is optimal for small data sets, but it puts most of the load on the leader node, making it suboptimal for large data sets. SELECT also extracts the files sequentially. This puts stress on your entire cluster if the file set is too large.

The UNLOAD function, on the other hand, is designed for extracting large file sets. Again, UNLOAD utilizes S3 to lighten the load on your Redshift cluster. UNLOAD writes data in parallel to multiple files at once (following Best Practice #1). This allows all compute nodes to work together to offload the file set.

7. Use Redshift Spectrum to manage spikes in data volume

Source: Amazon AWS

Source: Amazon AWS

Spectrum is one of the most valuable features on Redshift. It provides virtually unlimited scalability of your computing power — without adding additional nodes. During spikes in data volume, you can use Spectrum to perform complex, SQL-based queries on data directly in S3. You can then combine the results with your data already in Redshift.

Redshift Spectrum solves one of the biggest problems with Redshift early on. Unlike Hadoop, Redshift had a firm upper limit in terms of scale (100 nodes and 16TB of storage per node). Spectrum makes scalability virtually unlimited.

8. Monitor ETL health daily

Monitoring the health of your Redshift cluster will ensure you catch performance issues before they impact your work. Redshift offers a repository of diagnostic queries to run.

For example, if COPY commands are taking longer to execute than usual, use copy_performance.sql to see COPY command statistics over the past several days. This will allow you to determine if you’re following COPY best practices or if your clusters need to be resized.

Advanced Tips for Redshift ETL

Like many great things in life, Redshift is simple to learn and difficult to master. Here are a few advanced tips to get the most out of your Redshift ETL process.

Optimizing UPSERT performance

UPSERT is the command used when merging new records with existing records. Redshift recommends using a staging table to complete the merge. This is a command you’ll use often. If not run correctly, though, you could experience performance issues.

After investigating a particular UPSERT command that took 10 minutes to run with just one record, we discovered some interesting things:

=> select xid,substring(querytxt,0,32),starttime,endtime from stl_query where xid = 7345326 order by starttime sac;

xid | substring | starttime | endtime

--------+----------------------------------------------------+---------------------

7345326 | COPY ANALYZE users_staging | 2014-07-31 18:19:55 | 2014-07-31 18:19:57

7345326 | analyze compression phase 1 | 2014-07-31 18:19:57 | 2014-07-31 18:19:57

7345326 | analyze compression phase 2 | 2014-07-31 18:19:57 | 2014-07-31 18:20:09

7345326 | analyze compression phase 1 | 2014-07-31 18:20:09 | 2014-07-31 18:20:09

7345326 | analyze compression phase 2 | 2014-07-31 18:27:43 | 2014-07-31 18:27:44

...(repeat analyze compression phase 1 & 2)...

7345326 | copy users_staging("id"," | 2014-07-31 18:27:44 | 2014-07-31 18:27:45

7345326 | padb_fetch_sample: select co | 2014-07-31 18:27:45 | 2014-07-31 18:27:45

7345326 | padb_fetch_sample: select * | 2014-07-31 18:27:45 | 2014-07-31 18:27:45

7345326 | UPDATE users SET "id" = s.id | 2014-07-31 18:27:45 | 2014-07-31 18:27:48

7345326 | INSERT INTO users SELECT s.* | 2014-07-31 18:27:48 | 2014-07-31 18:27:48

(170 rows)

As you can see, the bottlenecks were the COPY ANALYZE and ANALYZE COMPRESSION commands. These commands run automatically when you try to COPY against empty tables (in this case, the temporary staging tables). We did not intend to run them in this UPSERT statement.

COPY ANALYZE and ANALYZE COMPRESSION are useful when bulk-loading new data, but not necessary when copying to temporary staging tables.

To solve the problem, we added COMPUPDATE OFF and STATUPDATE OFF options to the COPY command for loading data to a staging table. These commands allow you to skip COPY ANALYZE and ANALYZE COMPRESSION.

-- Load data into the staging table

COPY users_staging (id, name, city)

FROM 's3://.......'

CREDENTIALS 'aws_access_key_id=xxxxxxx;aws_secret_access_key=xxxxxxx'

COMPUPDATE OFF STATUPDATE OFF;

This simple fix improved our UPSERT performance from 10 minutes to just 18 seconds. Not bad!

Importing non-Western language data into Redshift

Western languages like English, Spanish, Italian, German — along with individual letters in Greek, Russian, Hebrew, Turkish and Arabic — are sometimes referred to as “single-byte” languages. They can be encoded using ASCII characters. If you’re using one of these languages, you can use CHAR columns when importing data into Redshift. This is the default in Redshift.

However, if you’re loading data written in any other language, like Mandarin, Japanese, or Hindi, you will receive an error like this:

ERROR: Load into table 'alex_test_table_char' failed. Check stl_load_errors system table for details.

And your stl_load_errors will say:

err_code | err_reason

----------+------------------------------------------------------------------------------------------------------

1220 | Multibyte character not supported for CHAR (Hint: try using VARCHAR). Invalid char: e6 97 ad

In these cases, you will need to use a VARCHAR column, which supports UTF-8 characters. UTF-8 can encode virtually every language on earth — over 120,000 characters, 120 scripts, and multiple symbol sets.

Connecting datasets with JOIN

Given the interconnectivity of analytical datasets, it’s no wonder that JOIN is one of the most common clauses used in Redshift. However, as you connect more and more datasets with JOIN, it can slow down your query processing and muddy up your code.

The key is to balance the simplicity and complexity. First, consider two of the most popular JOIN clauses: LEFT OUTER JOIN and INNER JOIN. While INNER JOINs often deliver cleaner results with less work, they provide less detail into the JOINed dataset.

LEFT OUTER JOINs require more work upfront, and the results can get cluttered if you’re not careful. However, the outcome is often worth it: Deeper insights and more interesting data.

Redshift ETL: Build or Buy?

Whether to build or buy your Redshift ETL pipeline is the great debate among developers and architects. As with many great debates, the answer is, “It depends.”

So how do you decide whether to build or buy your Redshift ETL solution? Here are a few factors to consider:

When to Build your ETL pipeline

Building your ETL pipeline for Redshift has a few key benefits.

First, you can completely own and customize your ETL solution. If you have very specific needs for your data movement — and you can’t find an off-the-shelf solution to solve them — then building your own ETL would be your best choice.

You should also consider building your own ETL pipeline if you have very simple or temporary data analytics needs. For example, if you’re planning a one-time data dump into Redshift, it would be less expensive to use open-source ETL tools, and you wouldn’t have to worry about maintenance.

When to BUY your ETL pipeline

If you want to spend less time building and maintaining your ETL — and more time on actual data analytics — then it’s better to buy an off-the-shelf ETL solution.

Many companies start out trying to build their ETL pipeline, only to switch to an off-the-shelf solution. In fact, over 50% of Integrate.io customers tried building their own ETL pipeline before switching.

It’s not that these organizations can’t build their own pipeline — it’s just not worth their time and developer resources. If you can find an ETL-as-a-Service that meets your needs, you can free up your DevOps team to focus on more mission-critical projects.

We'll handle your ETL pipeline.

Top Redshift ETL Tools

Thanks to Redshift’s popularity around the world, you have plenty of options for ETL tools. However, some deliver faster results and better ease-of-use than others. After all, the point of buying an ETL tool is to speed up and simplify your data analytics. It’s important to choose the right tool.

Let’s look at a few of the most popular Redshift ETL tools:

Integrate.io

Integrate.io is a cloud-based data warehouse integration company with a suite of product offerings. One of these offerings is an ETL tool that easily unites multiple data sources. The ETL platform offers a simple, intuitive visual interface for building data pipelines between a large number of sources and destinations.

While Integrate.io supports both ETL and ELT workflows, their powerful on-platform transformation layer makes Integrate.io an ideal choice for customers looking to take the traditional ETL approach.

Integrate.io is somewhat unique on this list in that it supports sending data to all major data warehouses as well as databases and Salesforce. This flexibility allows Integrate.io to scale well across many different teams within a given company.

When it comes to security, the ETL approach is definitely the more secure, giving the customers complete control over their data. Integrate.io is SOC 2 compliant and offers a Field Level Encryption feature to ensure customers can adhere to compliance requirements and best practices.

AWS Glue

Glue is the ETL service provided by Amazon. It’s a powerful and reliable tool, but it only connects with Amazon data sources (S3, Amazon RDS, DynamoDB) and Java Database Connectivity-accessible (JDBC) sources like Oracle DB.

If you want to connect other data sources, you’ll need to use open source tools like Apache Kafka and Kinesis Data Streams. Still, Glue is a cost-effective option for companies with developer resources to spare.

The Open Source ETL Stack

If you want to take a stab at building your own ETL pipeline with open source tools, here’s where to start:

- Apache Kafka: Distributed data streaming platform.

- Kafka Connect: A tool for reliably streaming data between Apache Kafka and other systems at scale.

Fivetran

Fivetran is another ETL-as-a-Service that replicates data to Redshift, Snowflake, DataBricks, Panoply, and BigQuery. While popular, Fivetran gets critiqued for its low ease-of-use and cryptic credit system.

StitchData

StitchData is another ETL-as-a-Service. Founded in 2016, they were recently purchased by Talend. Stitch is a developer-focused tool for rapidly moving data. Stitch is built on top of Singer open source ETL framework. It provides a rich collection of community-driven integrations. If you can’t find the necessary integration, you can always create it yourself. Otherwise, order its development from the implementation partners. However, Stitch can be difficult to learn for non-technical users.

Alooma

Once a popular Redshift ETL tool, Alooma was recently purchased by Google and now only supports BigQuery.

Do More with Redshift ETL

Amazon Redshift is the premier data warehouse for unlocking data-driven insights quickly. The big question for developers and architects is, “How do we get all of our data into Redshift quickly and reliably?”

All of the answers are in the guide above. Redshift is simple to learn but challenging to master. However, with a little research and hands-on experience, you will have a robust ETL pipeline running in no time.

For some companies, building their own ETL pipeline makes sense. For most, however, buying an off-the-shelf ETL pipeline is the best use of your limited time and resources.

Integrate.io is the preferred Redshift ETL tool for developers and architects that value speed, reliability, and ease-of-use. Integrate.io's straightforward pricing and world-class support make switching a simple choice.