Low-Code Data Pipelines & Transformations

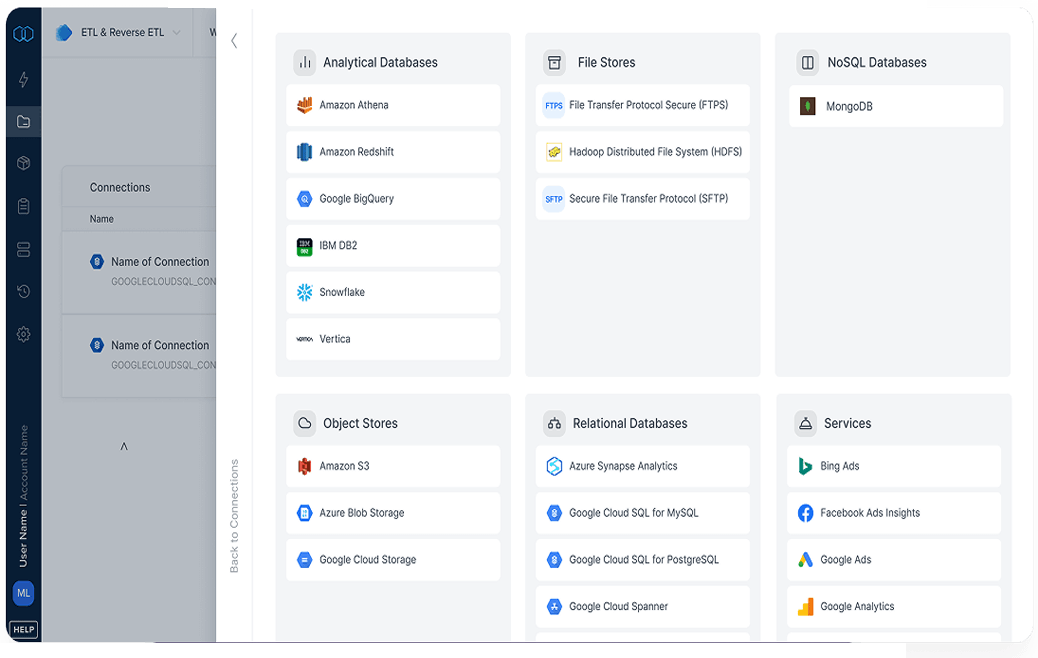

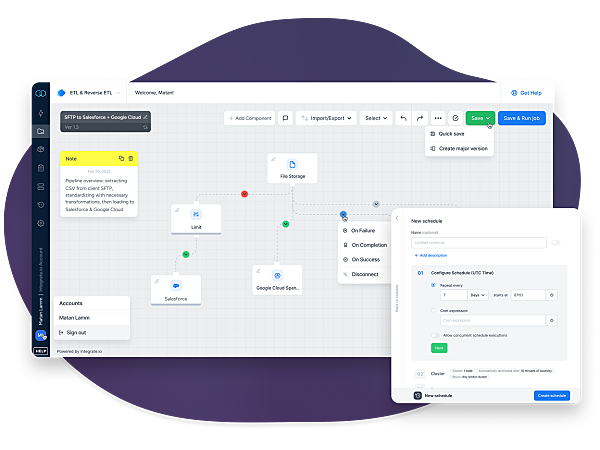

Automate data processes with our powerful drag-and-drop interface and 220+ data transformations


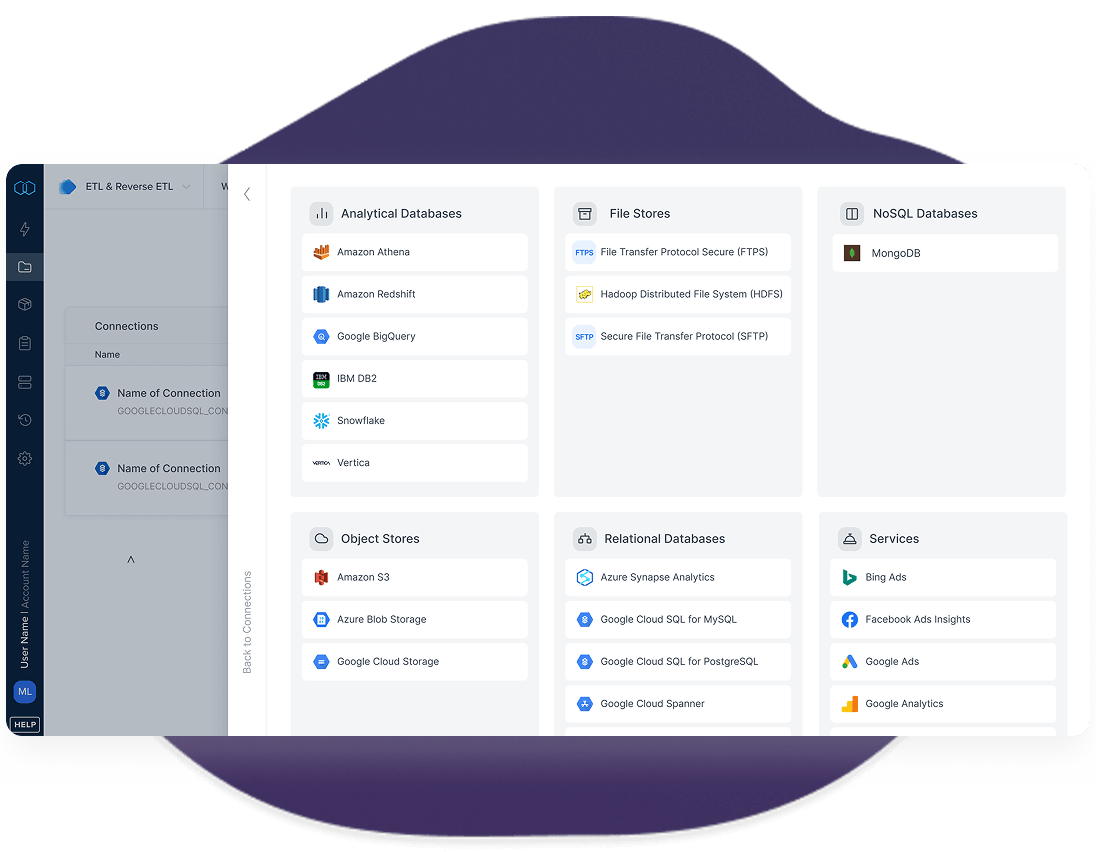

Platform Capabilities For Operational ETL

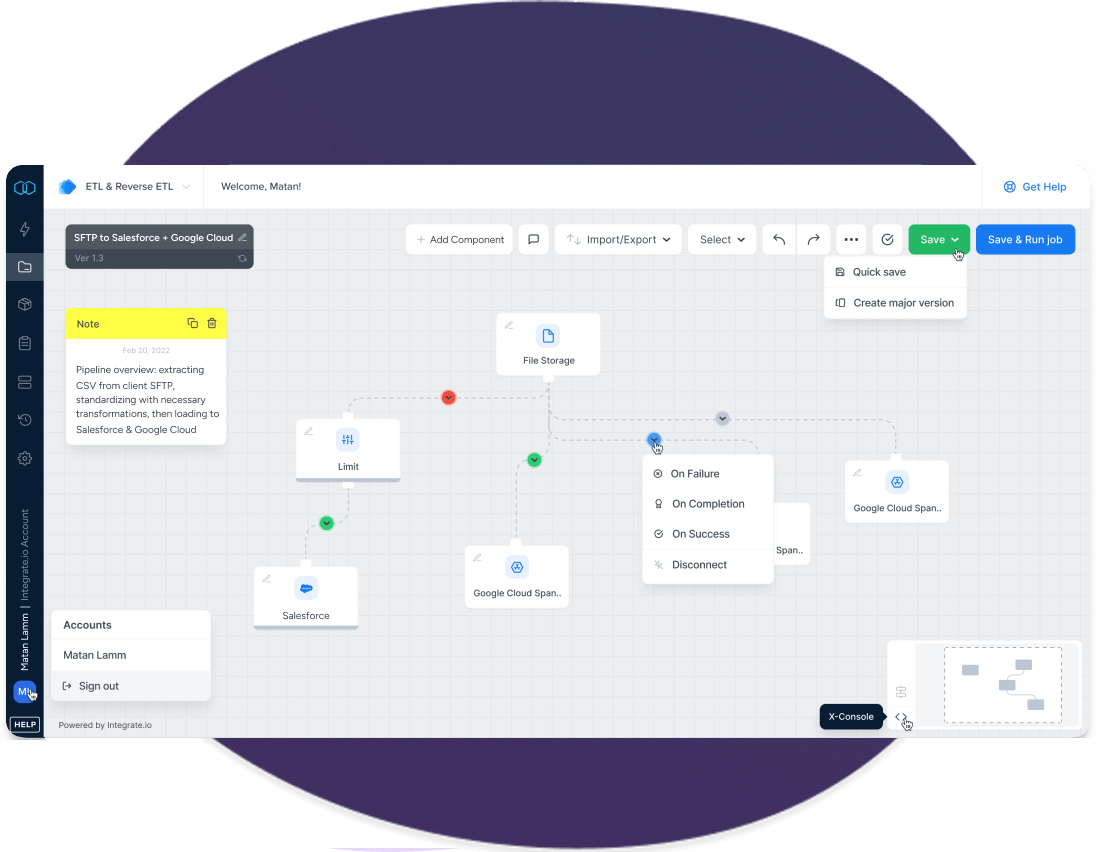

True Low-Code Platform

Every platform claims to be ‘low-code’ nowadays, but few actually are. Our solution was built for ease of use, allowing both technical and non-technical users to easily build and manage data pipelines. Empower your non-technical users!

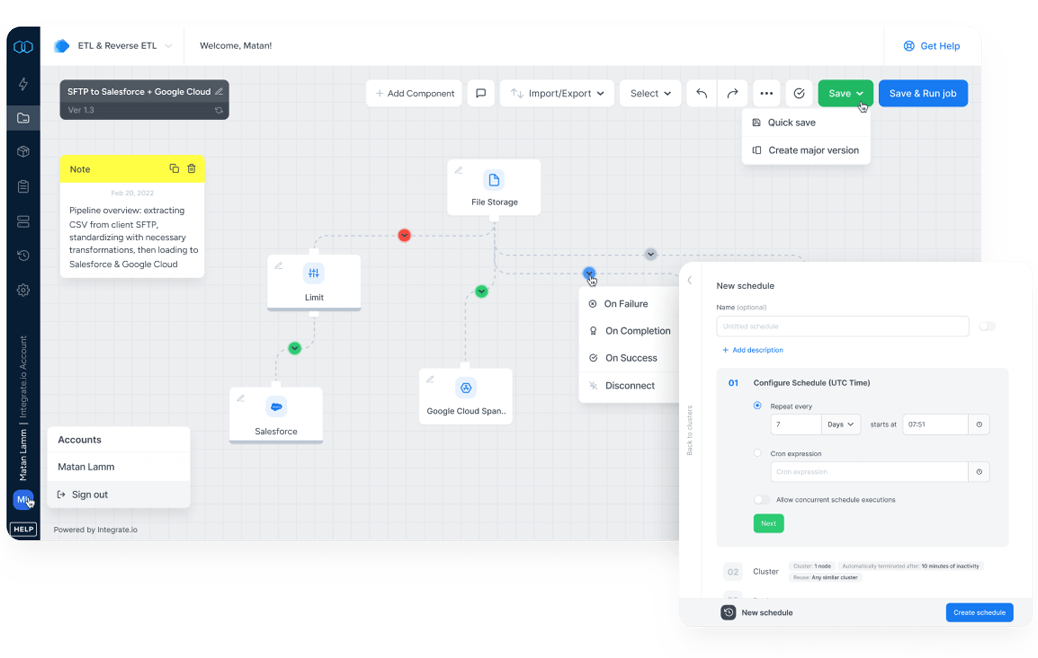

Streamline Business Data Processes

We excel at Operational ETL use cases, helping teams streamline manual data processes. Automate bidirectional Salesforce data integration, file data preparation, and B2B file data sharing.

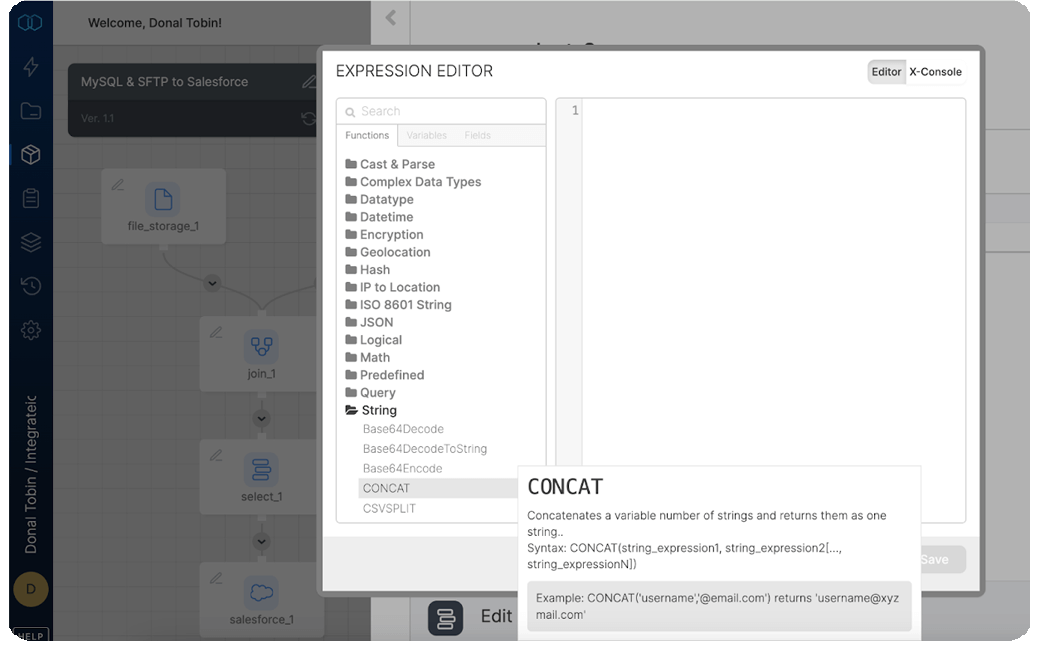

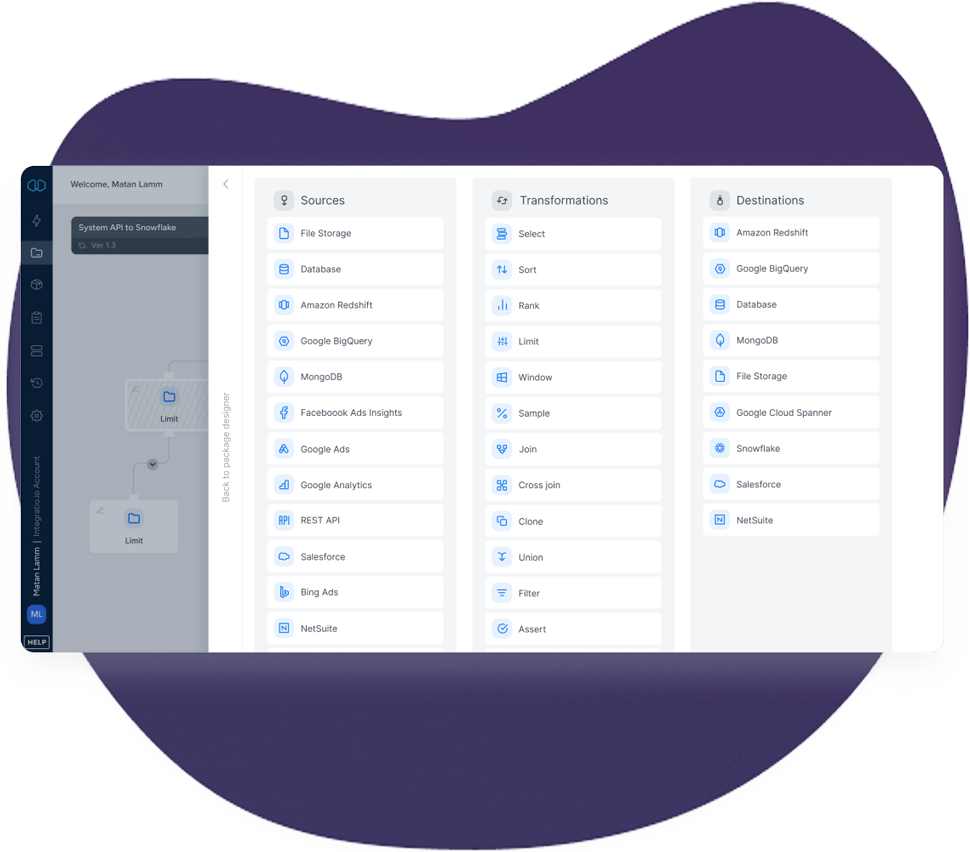

Transform Your Data

No need for SQL or scripting with our low-code data transformation layer. Choose from 220+ table and field-level transformations to get your data the way you want it. Advanced transformations are also available for more technical users.

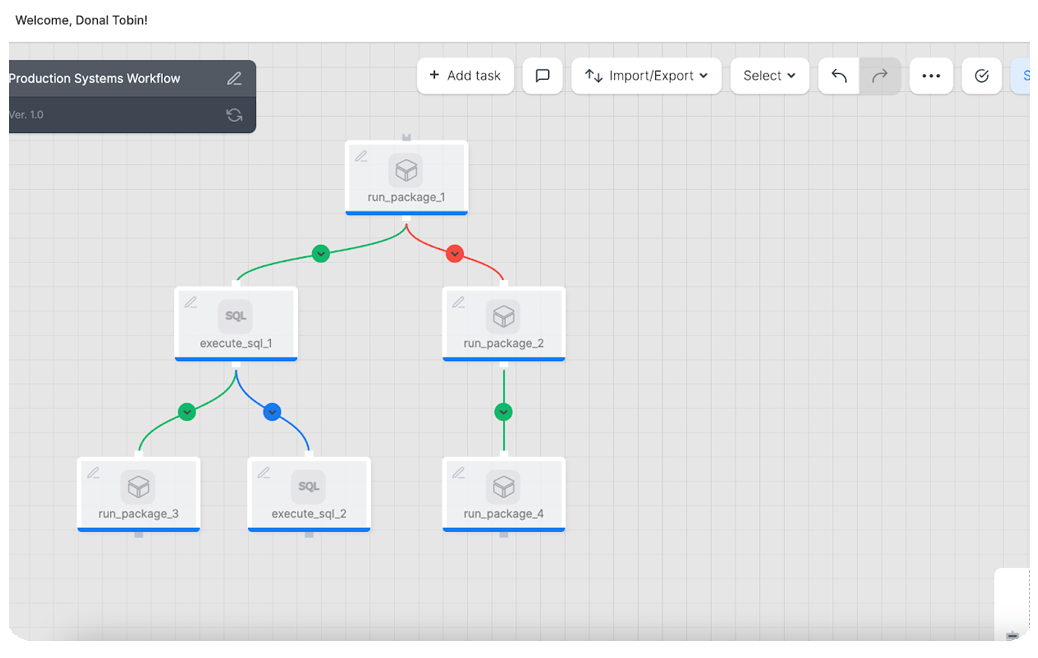

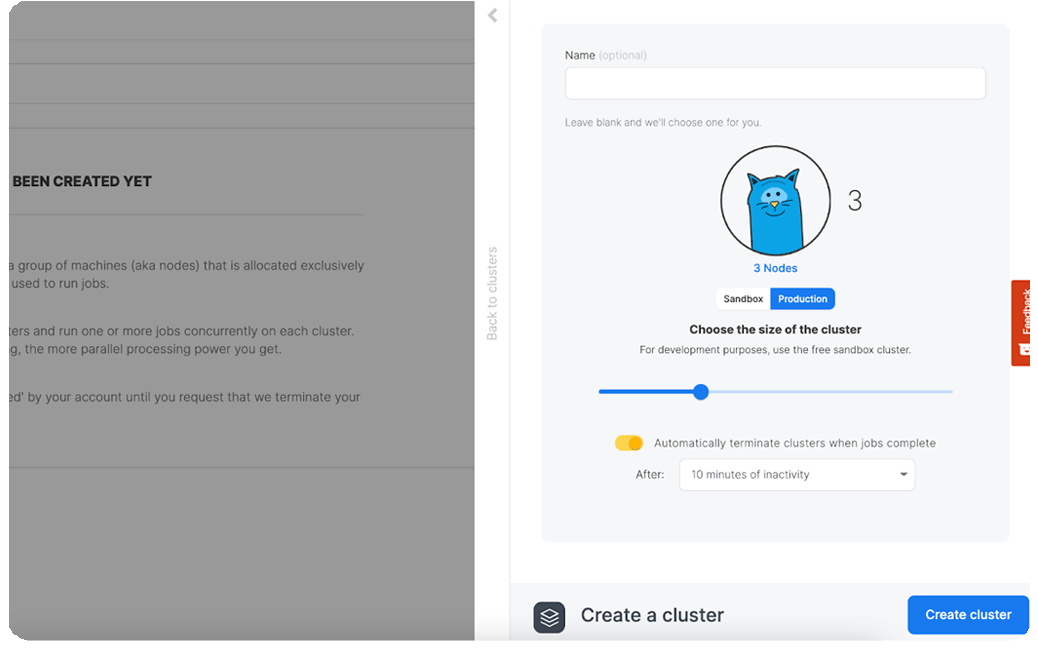

A package can be scheduled to run as often as every 5 minutes (on the Enterprise plan) or at whatever lower frequency is needed. However, to avoid a buildup of incomplete package runs, set the schedule interval longer than the time the package takes to run.
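As a rough illustration of that rule, the sketch below checks a proposed schedule against a package's observed run time. The helper names are hypothetical and not part of the platform, and the 5-minute floor is taken from the Enterprise plan figure above.

```python
import math

# Assumption: the 5-minute minimum quoted above for the Enterprise plan.
MIN_INTERVAL_MINUTES = 5


def is_safe_interval(interval_minutes: float, run_minutes: float) -> bool:
    """True when the interval is allowed and longer than the package's run time."""
    return interval_minutes >= MIN_INTERVAL_MINUTES and interval_minutes > run_minutes


def smallest_safe_interval(run_minutes: float) -> int:
    """Smallest whole-minute interval that exceeds the run time and the floor."""
    return max(MIN_INTERVAL_MINUTES, math.floor(run_minutes) + 1)
```

For example, a package that takes about 12 minutes to run would fail the check at a 10-minute schedule and pass it at 15 minutes.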

In general, up to 3 packages can run concurrently. When more than 3 packages are scheduled to run, the remaining packages run as cluster resources become available, that is, as the packages already running finish.
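The queueing behavior can be pictured with a small simulation. This is only a sketch under stated assumptions: all packages come due at the same moment, waiting packages start in list order (the actual ordering is not specified here), and `simulate_starts` is a hypothetical name rather than a platform function.

```python
import heapq

# Assumption: the general limit of 3 concurrent packages stated above.
MAX_CONCURRENT = 3


def simulate_starts(durations, slots=MAX_CONCURRENT):
    """Return each package's start time (minutes) when all are due at t=0.

    Packages are started in list order; that first-come ordering is an
    assumption made for illustration.
    """
    running = []  # min-heap of finish times for packages currently running
    starts = []
    for duration in durations:
        if len(running) < slots:
            start = 0  # a slot is free immediately
        else:
            start = heapq.heappop(running)  # wait for the earliest finisher
        starts.append(start)
        heapq.heappush(running, start + duration)
    return starts
```

With five packages taking 10, 4, 6, 5, and 3 minutes, the first three start immediately, the fourth starts at minute 4 when the shortest run finishes, and the fifth starts at minute 6.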

Yes, additional cluster resources can be purchased ($1,000/cluster/month) to allow more packages to run concurrently and/or to allow packages to run faster where possible.

Yes, we offer both Professional Services plans based on your needs and a Cruise Control option in which we build and maintain your pipelines for you.

Talk to an Expert

Speak with a Product Expert who can help solve your data challenges