Measuring Hard ROI of a No-Code Data Pipeline Platform

2022-2023 Benchmark Study

- Key Benchmark Takeaways

- Quality Data To Actionable Insights

- The Solution

- Efficiencies of a No-Code Data Pipeline Platform

- Results of a No-Code Data Pipeline Platform

- Benefits of Automated API Generation

- A Case Study

You will probably like us if your use case involves:

- Salesforce Integration: Our bi-directional Salesforce connector rocks!

- File Data Preparation: Automate file data ingestion, cleansing, and normalization.

- REST API Ingestion: We’ve yet to come across an API we can’t ingest from!

- Database Replication: Power data products with 60-second CDC replication.

We may not be a good fit if you’re looking for:

- 100s of Native Connectors: We’re not in the connectors race, sorry. We do quality, not quantity.

- Code-heavy Solution: We exist for users who don’t like spending their days debugging scripts.

- Self-serve Solution: A Solution Engineer will quickly tell you if we’re a good fit. Then lean on us as much or as little as you like.

- Trigger-based Pipelines: We don’t do trigger- or event-based pipelines. We can schedule ETL pipelines to run every 5 minutes and CDC pipelines every 60 seconds.

No-code data pipeline platforms can solve real data access pain points by providing tools to build data pipelines quicker without the need for expensive technical resources.

Using a data platform can eliminate significant hard and soft costs from data pipelines. These savings include lower CAC and marketing costs and less reliance on costly consultants and support contracts.

Citizen integrators are leading the way by leveraging new tools to build their own data pipelines. These tools include Automated API Generators and data pipeline platforms.

Using a single data pipeline platform to support citizen integrators offers a number of benefits. These include added convenience and pipeline visibility that improve the user experience and data quality.

Integrate.io has brought to market the first true, complete no-code data platform that meets the needs of data teams and citizen integrators alike. It provides all of the data tools and connectors needed to deliver clean, secure data quickly, so any team can now successfully harness its data.

Both the number of data sources and the types of data that companies work with have grown rapidly. As a result, accessing reliable, high-quality data for data-driven decisions has become an ever-increasing challenge.

Line-of-business teams such as operations, sales, and marketing all need quick access to data. They use it to make better decisions that lead to informed go-to-market strategies. Unfortunately, the data they need is distributed across multiple silos such as apps, databases, and data lakes. Without the skills or experience to access and prepare all this data, teams and individuals can’t make the best data-driven decisions.

At the core of this all-too-common problem is a missing company-wide strategy. Many organizations have not implemented a way to deliver the right data to the right people efficiently and on time. Some companies have designated data teams to fill this function, but not every company can afford to build a dedicated data team. Even those that can often find hiring for it very difficult. For companies without a data team, preparing data for analytics typically falls to core IT. These teams are often already overwhelmed with requests from across the company. At the same time, they are trying to focus on their main responsibility: building and maintaining the company’s core offering. The result is a bottleneck in core IT, and teams end up making decisions that are not based on data.

As firms look to build or launch a dedicated data team to support line-of-business decision-makers, the shortage of qualified talent in the market makes this very challenging. One of the primary causes is the diverse set of skills required to build and manage the entire data stack. People with these skills are not only very hard to find but also very costly to hire when you do find them. Below is a table based on LinkedIn data that shows how few candidates there are in the marketplace for certain technical roles compared to the demand.

| Role | Candidates relative to demand |
|---|---|
| Data Engineer | 2.5 |
| Data Scientist | 4.76 |
| Web Developer | 10.8 |
| Marketing Manager | 53.8 |

Without the resources needed to access and prepare a company’s data for company-wide analytics and reporting, workarounds eventually emerge. This typically starts with a homegrown, inefficient solution to one specific data need. Over time, the volume of these ‘one-off’ requests grows. The company ends up with a hacked-together reporting system that is unstable, unreliable, and a nightmare to update and manage. When data consumers’ needs go unmet, they usually revert to the most inefficient and error-prone solution: moving and scrubbing data manually.

At a certain point, the company realizes that they need to introduce a company-wide data strategy for clean and reliable analytics and reporting.

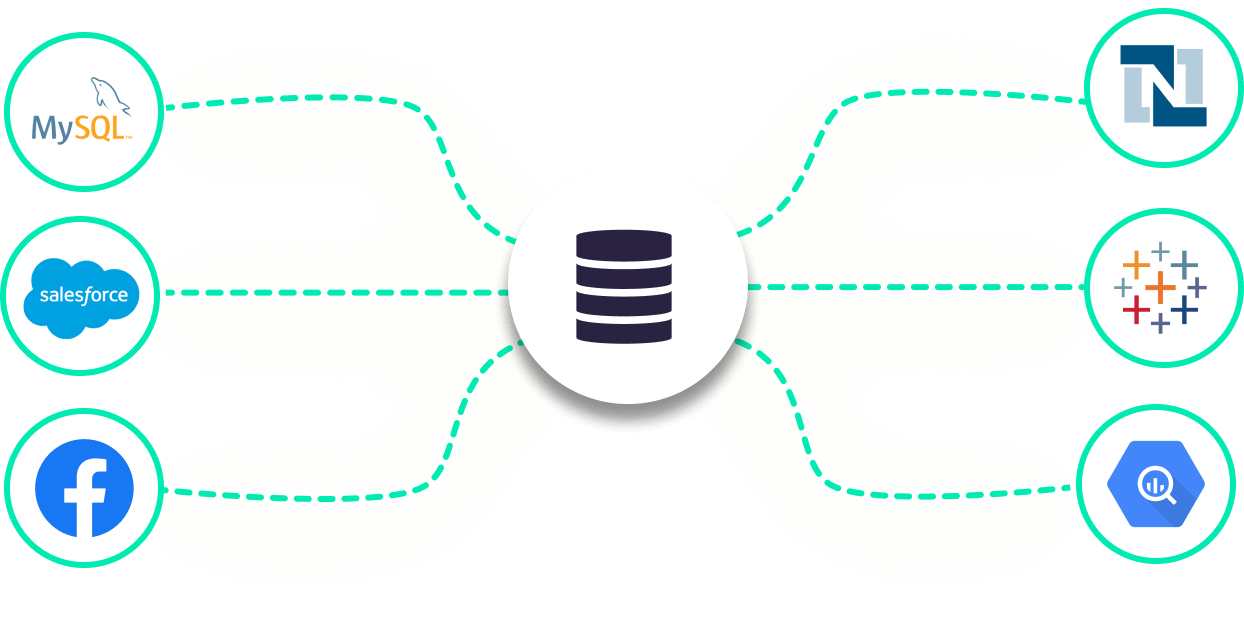

A no-code data pipeline platform can empower line-of-business, non-technical, and citizen integrator users to build data pipelines. These pipelines solve the complex problem of getting reliable data for analytics and reporting. Companies achieve this by creating a single source of truth: they centralize data from all their different sources to get one unified view of the business. Data warehouses are commonly used for this purpose. To build and maintain this single source of truth, companies must connect their data sources to their data warehouse, which is done using a data pipeline platform.

What do we mean by a data pipeline? A data pipeline is an end-to-end process that moves data from a source (database, CRM, SaaS application, etc.) to a destination (typically a data warehouse, database, internal app, or analytics dashboard). Along the way, data is transformed, cleansed, and prepared for analytics. Companies work with ever-growing volumes of data and applications, so there are myriad ways to build and architect data pipelines. Covering all of a company’s needs and requirements along its entire data journey requires a large number of technologies and skill sets. Each technology provides different strengths and capabilities, so access to a variety of technologies lets you create data pipelines optimized for each individual use case. However, bringing these technologies together to build a data pipeline not only takes time but also requires very specific domain expertise.
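The extract, transform, and load stages described above can be made concrete with a short example. Below is a minimal Python sketch of the kind of hand-coded pipeline that a no-code platform packages up. The source rows, the `contacts` table, and the cleansing rules are all hypothetical, and an in-memory SQLite database stands in for the data warehouse. It is an illustration only, not a description of how Integrate.io works internally.

```python
import sqlite3


def extract() -> list[dict]:
    """Stand-in for reading from a source system such as a CRM or SaaS API.

    The rows below are hypothetical sample data.
    """
    return [
        {"email": " Alice@Example.com ", "country": "us"},
        {"email": "bob@example.com", "country": "GB"},
        {"email": "", "country": "fr"},  # incomplete row with no email
    ]


def transform(rows: list[dict]) -> list[tuple[str, str]]:
    """Cleanse and normalize: trim and lowercase emails, uppercase country
    codes, and drop rows that have no email."""
    cleaned = []
    for row in rows:
        email = row.get("email", "").strip().lower()
        if not email:
            continue  # skip records that cannot be keyed
        cleaned.append((email, row.get("country", "").strip().upper()))
    return cleaned


def load(rows: list[tuple[str, str]], conn: sqlite3.Connection) -> int:
    """Upsert rows into the destination table, keyed by email."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contacts (email TEXT PRIMARY KEY, country TEXT)"
    )
    conn.executemany(
        "INSERT OR REPLACE INTO contacts (email, country) VALUES (?, ?)", rows
    )
    conn.commit()
    return len(rows)


def run_pipeline(conn: sqlite3.Connection) -> int:
    """Run extract -> transform -> load and return the number of rows loaded."""
    return load(transform(extract()), conn)


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    print(run_pipeline(warehouse))  # 2 rows survive cleansing
    print(warehouse.execute("SELECT * FROM contacts ORDER BY email").fetchall())
```

Re-running the pipeline upserts by email instead of duplicating rows. This is one simple way to keep a single source of truth consistent across scheduled runs.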

A platform approach with these capabilities already built in reduces the work required to build and manage data pipelines. All-in-one platforms are also easier to use because you don’t need to switch between separate apps and tools. Some platforms provide all the technologies needed across a company’s data pipelines, along with monitoring and orchestration of those pipelines. This approach results in a highly effective and impactful data strategy.

In response to this growing platform need, Integrate.io has brought to market the only true no-code pipeline platform that incorporates all the necessary technologies and tools to solve the needs of data teams and citizen integrators.

Realizing the Efficiencies of a No-Code Data Pipeline Platform

The efficiencies of a no-code data pipeline platform are real and quantifiable. In a survey of over 150 of Integrate.io’s clients and prospects, many hard costs and time savings were identified along with opportunities to impact the top line.

Specific outcomes include reductions in marketing costs, customer acquisition cost (CAC), and CAC payback period. Another outcome was the elimination of costly third-party resources, which had a far-reaching impact on multiple teams. Specific data points cited include:

Organizations can also realize efficiencies from a no-code data pipeline platform by making more effective use of existing resources. In many cases, developers are poached from software engineering teams, who are busy getting products or services to market, to help build or fix broken data pipelines. With a no-code platform, companies can keep these resources on core revenue-generating product projects. They can also empower the line-of-business users who request the data to easily build and maintain their own clean, secure data pipelines.

If you do have some data engineering talent on staff, a no-code data platform also lets them compound and maximize their impact. They can focus on the highest-value tasks and leave more mundane work to the platform, such as building and managing data connectors, orchestration, and data security. With this approach, data teams can maximize their output and value without increasing the size and cost footprint of the team. In fact, respondents to our survey indicated that they shortened their time to market by up to 18 weeks. Their average time to market when using Integrate.io’s data pipeline platform was just 9 weeks.

Users of No-Code Data Pipeline Platforms Save Time

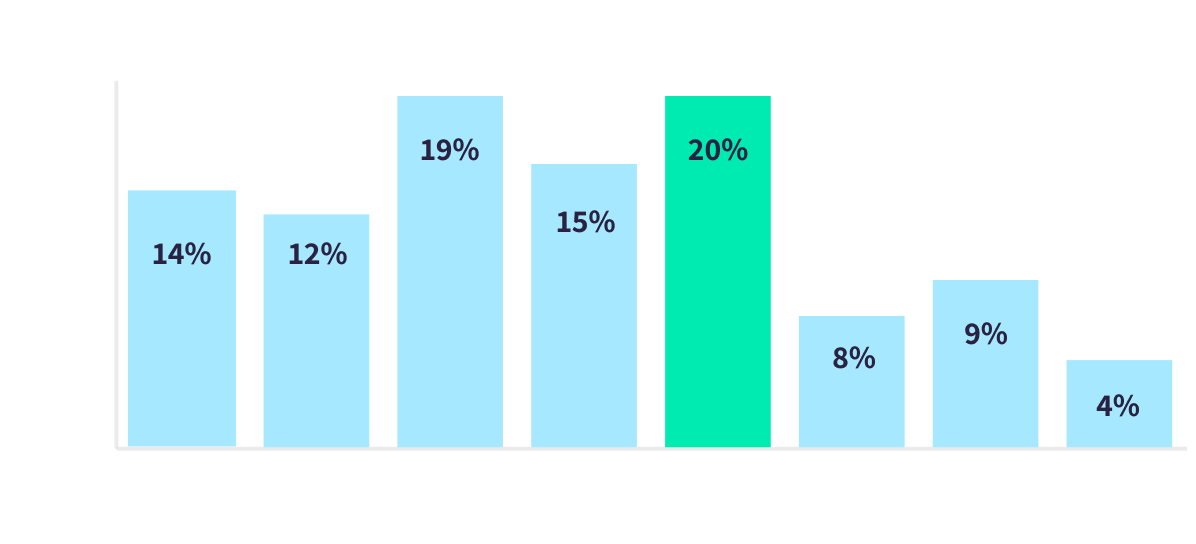

Respondents interviewed for this study were asked, “How much faster is your data team creating and releasing integrations/pipelines using Integrate.io vs. building them internally?” The thirteen participants who responded reported being almost 3x as fast on average (2.7 times faster), with answers ranging from 1.4 to 5 times. In our survey of Integrate.io users, we also asked respondents to estimate how many hours per month engineers and/or marketers saved using the Integrate.io platform. Across the twenty-three participants, the average response was 44 hours per month.
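The survey figures above can be turned into rough planning numbers with simple arithmetic. The sketch below assumes that a speed-up factor applies uniformly to an entire build. The 10-week internal build estimate is a hypothetical input. The 2.7x average, the 1.4x to 5x range, and the 44 hours per month come from the survey.

```python
def platform_build_weeks(internal_weeks: float, speedup: float) -> float:
    """Weeks needed on the platform if building is `speedup` times faster."""
    if speedup <= 0:
        raise ValueError("speedup must be positive")
    return internal_weeks / speedup


def weeks_saved(internal_weeks: float, speedup: float) -> float:
    """Weeks saved relative to building the pipeline internally."""
    return internal_weeks - platform_build_weeks(internal_weeks, speedup)


def annual_hours_saved(hours_per_month: float) -> float:
    """Annualize a monthly time saving."""
    return hours_per_month * 12


if __name__ == "__main__":
    internal_weeks = 10.0  # hypothetical internal build estimate
    for speedup in (1.4, 2.7, 5.0):  # survey low end, average, high end
        print(
            f"{speedup}x: {platform_build_weeks(internal_weeks, speedup):.1f} weeks "
            f"(saves {weeks_saved(internal_weeks, speedup):.1f})"
        )
    print(annual_hours_saved(44))  # 528 hours per year
```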

The report also found that productivity increases have a direct and proportional effect on cost savings. Along with data on improved efficiency, respondents estimated how these productivity gains translate into real cost savings. On average, respondents reported saving close to $200,000 per year ($187,000 to be exact), and 60% reported saving between $100,000 and $200,000 per year.

Realizing the Benefits of Automated API Generation

REST APIs are a vital component of data pipelines. They enable applications to ingest data as well as make it available to other applications. Standardized REST APIs make accessing data anywhere in the cloud a simple process, so tools and analytics applications can easily access data and drive insights. This is why APIs are so important to the data ecosystem: they are the foundation of interconnected databases, applications, and automation processes. When you publish APIs, anyone with the correct authentication can generate value from your data, whether third parties or people within your organization. They can also support data pipelines that lead to more efficient operations.
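Ingesting data from an authenticated REST API often follows a simple pattern: send a token with each request and walk through the result pages. The Python sketch below uses only the standard library to illustrate that pattern. The endpoint, the `page` query parameter, the bearer-token scheme, and the `{"records": [...], "has_more": bool}` response shape are all hypothetical assumptions, and real APIs differ in how they paginate and authenticate.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable, Iterator


def build_request(base_url: str, token: str, page: int) -> urllib.request.Request:
    """Build an authenticated GET request for one page of results.

    Assumes a hypothetical API that accepts a `page` query parameter and a
    bearer token in the Authorization header.
    """
    url = f"{base_url}?{urllib.parse.urlencode({'page': page})}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {token}", "Accept": "application/json"},
        method="GET",
    )


def fetch_json(req: urllib.request.Request) -> dict:
    """Send the request and decode the JSON body (performs real network I/O)."""
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)


def ingest_all(
    base_url: str,
    token: str,
    fetch: Callable[[urllib.request.Request], dict] = fetch_json,
) -> Iterator[dict]:
    """Yield every record, page by page, until the API reports no more pages.

    Assumes a hypothetical response shape: {"records": [...], "has_more": bool}.
    """
    page = 1
    while True:
        body = fetch(build_request(base_url, token, page))
        yield from body.get("records", [])
        if not body.get("has_more"):
            break
        page += 1
```

Because `fetch` is injectable, the paging loop can be tested without a live API. For example, a stub can return canned pages keyed by the `page` query parameter.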

Building APIs Takes Time

Not only are APIs expensive to build, but they also consume valuable dev team time, taking developers away from other mission-critical and revenue-critical projects. Our data found that almost 40% of respondents took more than 250 days to build a single API, with an average of 120 days per API.

As discussed earlier, good developers are very hard to find. Reducing the time they spend on API development frees them to focus on more important revenue-generating tasks.

What Drives API Costs?

The effort spent building APIs is not spread evenly across all processes. Most of the time goes into designing, prototyping, and developing a minimum viable product (MVP) of each API. Our data also revealed that only 25% of the process was spent integrating features such as monitoring, transaction management, and logging capabilities.

The More APIs You Build, the More You Benefit from a Platform

Significant savings can be realized by using a platform to automatically build secure APIs. Based on the cost averages identified above in figure 3, the average organization could save $45,719 per API using Integrate.io. The data also shows that 75% of respondents could realize a positive ROI by using a platform to build a single API. This number jumps to 80% when you include respondents who are interested in building more than one API. Organizations typically have a variety of data sets that can be leveraged for greater value, so many build more than one API: 42% of respondents plan to do so.
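The claim that ROI grows with the number of APIs can be checked with a simple model. The sketch below computes ROI as (total savings minus platform cost) divided by platform cost. It assumes that savings scale linearly with the number of APIs and it ignores ongoing maintenance. The $45,719 average saving per API comes from the study. The $60,000 platform cost is a purely hypothetical figure for illustration.

```python
import math


def platform_roi(savings_per_api: float, num_apis: int, platform_cost: float) -> float:
    """Simple ROI: (total savings - platform cost) / platform cost."""
    if platform_cost <= 0:
        raise ValueError("platform_cost must be positive")
    return (savings_per_api * num_apis - platform_cost) / platform_cost


def break_even_apis(savings_per_api: float, platform_cost: float) -> int:
    """Smallest number of APIs whose combined savings cover the platform cost."""
    if savings_per_api <= 0:
        raise ValueError("savings_per_api must be positive")
    return math.ceil(platform_cost / savings_per_api)


if __name__ == "__main__":
    SAVINGS_PER_API = 45_719  # average saving per API reported in the study
    PLATFORM_COST = 60_000    # hypothetical platform cost, illustration only
    for n in (1, 2, 3):
        print(n, f"{platform_roi(SAVINGS_PER_API, n, PLATFORM_COST):+.0%}")
    print("break-even at", break_even_apis(SAVINGS_PER_API, PLATFORM_COST), "APIs")
```

Under these assumptions, a fixed platform cost is spread over more APIs as an organization builds them. This is why ROI rises with each additional API.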

Soft Costs

Implementing a no-code data pipeline platform has many quantifiable benefits, but it also brings real gains that are much harder to measure. For example, freeing talented, scarce data specialists from manual data work will lead to better retention. No one with advanced skills wants to spend hours manually manipulating data.

Decision-makers have access to data that has already been transformed into insights. As a result, they can make better decisions, and make them faster, with no need to wait for IT departments to pull and transform data. Consistently making better decisions translates into continuous performance improvements.

Data is captured from external data sources and exported outside of the company. Additionally, 18 files had been created for SFDC and SQL Server, and data was integrated manually through DataLoader, Salesforce Connect, and SQL imports. Some of these jobs have multiple integrated steps that must execute in order. With Integrate.io, several jobs are now automated, with the goal of automating all 50 jobs and 3 connectors.
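Jobs whose steps "must execute in order" pose a dependency-ordering problem. The sketch below is an illustration only, not a description of how Integrate.io orchestrates jobs. It uses Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+) to compute a valid run order. The step names are hypothetical, loosely modeled on the SFTP, SQL Server, and Salesforce flows in this case study.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical multi-step job: each step maps to the set of steps it depends on.
CARRIER_IMPORT_JOB = {
    "download_from_sftp": set(),
    "cleanse_and_normalize": {"download_from_sftp"},
    "load_sql_server": {"cleanse_and_normalize"},
    "sync_salesforce": {"load_sql_server"},
}


def execution_order(steps: dict[str, set[str]]) -> list[str]:
    """Return an order in which every step runs after all of its dependencies.

    Raises graphlib.CycleError if the dependencies are circular.
    """
    return list(TopologicalSorter(steps).static_order())


if __name__ == "__main__":
    print(execution_order(CARRIER_IMPORT_JOB))
    try:
        execution_order({"a": {"b"}, "b": {"a"}})
    except CycleError as exc:
        print("cannot schedule:", exc.args[0])
```

When several steps share a dependency, any order the sorter returns is valid, and a scheduler could run those steps in parallel.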

- Sources & Destinations: SFTPs, Salesforce CRM, and SQL Server databases

- 5 months to implementation, with 1 resource, to create:

  - 10 workflows

  - 50+ jobs/data pipelines

  - 3 data connectors used

- Overachieved against the planned implementation timelines

- Simplicity Matters: Use ETL software solutions instead of investing heavily in internal custom code or manually manipulating data into their platforms.

- A Holistic View Is Best: The team learned that it needs to understand how things are architected and engineered, because new use cases can turn out differently than expected.

- Agility = Success: The ability to learn a new system quickly enables faster data pipeline development.

A Case Study: How a No-Code Data Pipeline Saves A Leading American Engineering Council $150,000 Every Year

A health plan financial trust provides insurance to participating member firms. It works with two insurance carriers, which lets firms in certain states use the trust as a partner for benefits administration through those carriers.

Use Case

This company needed to move data to address two different use cases. The first was ingesting carrier-reported data and administering it to value-added vendors. The second was using the data internally to gain visibility into customers, create analytics, and handle account management and program administration through its CRM and database systems.

Currently, they capture data from 5 external carriers, export data to one carrier, and plan to add more in the future. Internal data movement is also required to perform file-based services between SFTPs, Salesforce CRM, and SQL Server databases, creating a lot of moving parts that are required for different reporting purposes within the organization. Doing this successfully required an agile environment to accommodate changes in their data pipeline needs.

Challenges

Originally, this company implemented a complex data integration and API solution that required highly specific technical skill sets, as well as additional costly software and development support. With tight resources and one dedicated data person to manage data pipelines, this approach was not feasible long term. Overall, they were spending an additional $100,000 to $150,000 on support contracts to manage their data pipelines, which was eating away at their IT budget. Although this solution provided a robust toolset, IT wanted to become leaner and spend its budget more broadly. To accommodate the expanding business and changing needs, IT also wanted to manage and deploy data pipelines in a more agile, self-service way.

They also struggled to automate the movement of data in and out of their environments. This caused problems with data manipulation and data cleanliness, both for in-flight data and for data stored in target destinations. With so many data sources and moving parts, it was difficult to gain overall visibility through a single source of truth without scrubbing and transporting data. These challenges led them to search for a broader ETL platform to support their expanding requirements.

The Solution and Outcomes

Enter Integrate.io. Integrate.io was selected due to its self-service nature and automation. With one person to create, maintain, and manage jobs and data pipelines, leveraging a self-service solution using a low-code/no-code interface was essential for a successful deployment with limited overhead. This included enabling more automation and reuse across source systems.

Initially, the lead IT resource created a 5-month project plan to build and test the new data ecosystem and lifecycles with hands-off automation. This included 50 jobs that were put into sandboxes for full utilization testing and then automatically moved to production. Thanks to the ability to automate data pipelines and system processes, the company was able to realize its goals and cut down on time spent on data pipeline creation.

They realized annual cost savings of $100,000 to $150,000 after implementing Integrate.io. Integrate.io saved the organization upwards of $50,000 in annual software costs. Thanks to automation and the self-service platform, the organization also eliminated $50,000 to $100,000 in support costs. These cost savings and broader automation across the data ecosystem gave them faster time to value, quicker access to data, and improved scalability. This enabled a more centralized view across multiple systems, supporting better decision-making.
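The two reported components add up to the annual total when the software saving is taken at its stated floor of $50,000:

```latex
\[
\underbrace{\$50{,}000}_{\text{software}}
+ \underbrace{\$50{,}000 \text{ to } \$100{,}000}_{\text{support}}
= \$100{,}000 \text{ to } \$150{,}000 \text{ per year}
\]
```

The software saving is reported as "upwards of" $50,000, so the combined figure is a lower-bound reading of the case study's numbers.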
